();

    public SecurityMonitorWindow()
    {
        InitializeComponent();

        // Set up a 2x2 grid of camera views
        for (int row = 0; row < 2; row++)
        {
            for (int col = 0; col < 2; col++)
            {
                var view = new VisioForge.Core.UI.WPF.VideoView();
                Grid.SetRow(view, row);
                Grid.SetColumn(view, col);
                mainGrid.Children.Add(view);
                cameraViews.Add(view);

                // Create and configure the camera for this cell
                var camera = new VideoCapture();
                camera.OnVideoFrameBuffer += (s, e) => view.RenderFrame(e);
                cameras.Add(camera);
            }
        }
    }

    public async Task StartCamerasAsync()
    {
        for (int i = 0; i < cameras.Count; i++)
        {
            cameras[i].VideoSource = VideoSource.CameraSource;
            cameras[i].CameraDevice = new CameraDevice(i); // Assuming cameras are indexed 0-3
            await cameras[i].StartAsync();
        }
    }
}

```
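`StartCamerasAsync` awaits each camera before opening the next one, so the startup times add up. A minimal sketch of starting them concurrently with `Task.WhenAll` follows. It uses a hypothetical `IStartable` interface (and a `FakeCamera` test double) so the pattern compiles without the SDK; whether a given capture engine tolerates concurrent start calls is an assumption you should verify on your hardware.

```csharp
using System.Collections.Generic;
using System.Linq;
using System.Threading.Tasks;

// Hypothetical abstraction standing in for a capture engine with an async start method
public interface IStartable
{
    Task StartAsync();
}

public static class CameraStartup
{
    // Starts every device concurrently and completes when all of them have started.
    // If any start fails, awaiting the returned task throws the first failure.
    public static Task StartAllAsync(IEnumerable<IStartable> devices)
    {
        return Task.WhenAll(devices.Select(d => d.StartAsync()));
    }
}

// Hypothetical test double that records whether it was started
public sealed class FakeCamera : IStartable
{
    public bool Started { get; private set; }

    public async Task StartAsync()
    {
        await Task.Delay(10); // simulate device initialization time
        Started = true;
    }
}
```

To use this with the SDK, you would wrap each camera in a small adapter whose `StartAsync` calls the camera's own `StartAsync`.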

## Troubleshooting Common Issues

### Handling Frame Synchronization

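If you render the same frame to several views and see timing issues across the displays, serialize the rendering with a lock so that each callback finishes updating every view before the next one starts: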

```cs
private readonly object syncLock = new object();

private void VideoCapture1_OnVideoFrameBuffer(object sender, VideoFrameBufferEventArgs e)
{
    // Only one callback at a time can draw into the views
    lock (syncLock)
    {
        foreach (var view in videoViews)
        {
            view.RenderFrame(e);
        }
    }
}
```

---

For more code samples and advanced implementation techniques, visit our [GitHub repository](https://github.com/visioforge/.Net-SDK-s-samples).

---END OF PAGE---

# Local File: .\dotnet\general\code-samples\onvideoframebitmap-usage.md

---

title: Mastering OnVideoFrameBitmap in .NET Video Processing

description: Learn how to manipulate video frames in real-time with OnVideoFrameBitmap events in .NET applications. This detailed guide provides practical code examples, performance tips, and advanced techniques for C# developers working with video processing in .NET SDK environments.

sidebar_label: OnVideoFrameBitmap Event Usage

---

# Mastering Real-Time Video Frame Manipulation with OnVideoFrameBitmap

[!badge size="xl" target="blank" variant="info" text="Video Capture SDK .Net"](https://www.visioforge.com/video-capture-sdk-net) [!badge size="xl" target="blank" variant="info" text="Video Edit SDK .Net"](https://www.visioforge.com/video-edit-sdk-net) [!badge size="xl" target="blank" variant="info" text="Media Player SDK .Net"](https://www.visioforge.com/media-player-sdk-net)

The `OnVideoFrameBitmap` event, available in the Video Capture, Video Edit, and Media Player SDKs listed above, lets you access and modify video frames in real time. This guide covers practical applications, implementation techniques, and performance considerations for bitmap frame manipulation in C# applications.

## Understanding OnVideoFrameBitmap Events

The `OnVideoFrameBitmap` event provides a direct interface to access video frames as they're processed by the SDK. This capability is essential for applications that require:

- Real-time video analysis

- Frame-by-frame manipulation

- Dynamic overlay implementation

- Custom video effects

- Computer vision integration

When the event fires, it delivers a bitmap representation of the current video frame, allowing for pixel-level access and manipulation before the frame continues through the processing pipeline.

## Basic Implementation

To begin working with the `OnVideoFrameBitmap` event, you'll need to subscribe to it in your code:

```csharp
// Subscribe to the OnVideoFrameBitmap event
videoProcessor.OnVideoFrameBitmap += VideoProcessor_OnVideoFrameBitmap;

// Implement the event handler
private void VideoProcessor_OnVideoFrameBitmap(object sender, VideoFrameBitmapEventArgs e)
{
    // Frame manipulation code will go here
    // e.Frame contains the current frame as a Bitmap
}
```

## Manipulating Video Frames

### Simple Bitmap Overlay Example

The following example demonstrates how to overlay an image on each video frame:

```csharp
// Inside the OnVideoFrameBitmap event handler
using (Bitmap bmp = new Bitmap(@"c:\samples\pics\1.jpg"))
using (Graphics g = Graphics.FromImage(e.Frame))
{
    g.DrawImage(bmp, 0, 0, bmp.Width, bmp.Height);
    e.UpdateData = true;
}
```

In this code:

1. We create a `Bitmap` object from an image file

2. We use the `Graphics` class to draw onto the frame bitmap

3. We set `e.UpdateData = true` to inform the SDK that we've modified the frame

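4. We dispose of the overlay bitmap and the `Graphics` object to prevent memory leaks. Loading the image from disk on every frame keeps the example short; in production, load it once and reuse it, as described under Performance Considerations.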

> **Important:** Always set `e.UpdateData = true` when you modify the frame bitmap. This signals the SDK to use your modified frame instead of the original.

### Adding Text Overlays

Text overlays are commonly used for timestamps, captions, or informational displays:

```csharp
using (Graphics g = Graphics.FromImage(e.Frame))
{
    // Create a semi-transparent background for the text
    using (SolidBrush brush = new SolidBrush(Color.FromArgb(150, 0, 0, 0)))
    {
        g.FillRectangle(brush, 10, 10, 200, 30);
    }

    // Add the text overlay
    using (Font font = new Font("Arial", 12))
    using (SolidBrush textBrush = new SolidBrush(Color.White))
    {
        g.DrawString(DateTime.Now.ToString(), font, textBrush, new PointF(15, 15));
    }

    e.UpdateData = true;
}
```

## Performance Considerations

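The `OnVideoFrameBitmap` handler runs for every frame, so slow processing can stall playback or cause dropped frames. At 30 fps, each frame has a budget of about 33 ms (1000 / 30), and your handler should use only a fraction of it, because the SDK needs part of that time for its own processing.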

### Resource Management

Proper resource management is essential:

```csharp
// Poor performance approach
private void VideoProcessor_OnVideoFrameBitmap(object sender, VideoFrameBitmapEventArgs e)
{
    Bitmap overlay = new Bitmap(@"c:\logo.png"); // Reloaded from disk on every frame
    Graphics g = Graphics.FromImage(e.Frame);
    g.DrawImage(overlay, 0, 0);
    e.UpdateData = true;
    // Memory leak! Graphics and Bitmap not disposed
}

// Optimized approach
private Bitmap _cachedOverlay;

private void InitializeResources()
{
    // Load the overlay once, before processing starts
    _cachedOverlay = new Bitmap(@"c:\logo.png");
}

private void VideoProcessor_OnVideoFrameBitmap(object sender, VideoFrameBitmapEventArgs e)
{
    using (Graphics g = Graphics.FromImage(e.Frame))
    {
        g.DrawImage(_cachedOverlay, 0, 0);
        e.UpdateData = true;
    }
}

private void CleanupResources()
{
    // Release the cached overlay when processing stops
    _cachedOverlay?.Dispose();
}
```

### Optimizing Processing Time

To maintain smooth video playback:

1. **Pre-compute where possible**: Prepare resources before processing begins

2. **Cache frequently used objects**: Avoid creating new objects for each frame

3. **Process only when necessary**: Add conditional logic to skip frames or perform less intensive operations when needed

4. **Use efficient drawing operations**: Avoid costly GDI+ settings such as `InterpolationMode.HighQualityBicubic` or `SmoothingMode.AntiAlias` when the overlay does not need them

```csharp
private int _frameCounter;

private void VideoProcessor_OnVideoFrameBitmap(object sender, VideoFrameBitmapEventArgs e)
{
    // Only process every second frame; skipped frames pass through unmodified
    if (_frameCounter % 2 == 0)
    {
        using (Graphics g = Graphics.FromImage(e.Frame))
        {
            // Your frame processing code
            e.UpdateData = true;
        }
    }

    _frameCounter++;
}
```
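If the step you skip on alternate frames is drawing, the overlay appears on only half of the frames and flickers. When the expensive part is analysis rather than drawing, a common alternative is to run the analysis every Nth frame and reuse the last result on the frames in between, while still drawing on every frame. A minimal sketch with a hypothetical `FrameSampler` helper, which is not part of the SDK:

```csharp
using System;

// Hypothetical helper (not part of the SDK): runs an expensive analysis on every
// Nth frame and returns the cached result for the frames in between.
public sealed class FrameSampler<TFrame, TResult>
{
    private readonly int _interval;
    private readonly Func<TFrame, TResult> _analyze;
    private long _frameCounter;
    private TResult _lastResult;

    public FrameSampler(int interval, Func<TFrame, TResult> analyze)
    {
        if (interval < 1) throw new ArgumentOutOfRangeException(nameof(interval));
        _interval = interval;
        _analyze = analyze ?? throw new ArgumentNullException(nameof(analyze));
    }

    // Call once per frame. The analysis runs on frames 0, N, 2N, ...;
    // every other call returns the most recent result.
    public TResult Next(TFrame frame)
    {
        if (_frameCounter % _interval == 0)
        {
            _lastResult = _analyze(frame);
        }

        _frameCounter++;
        return _lastResult;
    }
}
```

In the handler you would call `Next(e.Frame)` on every frame and draw the returned result every time, so the overlay stays steady while the analysis runs at a fraction of the frame rate.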

## Advanced Frame Manipulation Techniques

### Applying Filters and Effects

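You can implement custom image processing filters by working on the locked pixel data directly. The following example converts the frame to grayscale in place using the ITU-R BT.601 luma weights (0.299, 0.587, 0.114); after calling it from the event handler, set `e.UpdateData = true`: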

```csharp
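// Requires: using System; using System.Drawing; using System.Drawing.Imaging; using System.Runtime.InteropServices;
private void ApplyGrayscaleFilter(Bitmap bitmap)
{
    // Only 24 bpp (BGR) and 32 bpp (BGRA) layouts are handled here
    int bytesPerPixel = Image.GetPixelFormatSize(bitmap.PixelFormat) / 8;
    if (bytesPerPixel != 3 && bytesPerPixel != 4) return;

    Rectangle rect = new Rectangle(0, 0, bitmap.Width, bitmap.Height);
    BitmapData bmpData = bitmap.LockBits(rect, ImageLockMode.ReadWrite, bitmap.PixelFormat);
    try
    {
        // Process one row at a time so row padding and negative strides are handled
        int rowBytes = bitmap.Width * bytesPerPixel;
        byte[] row = new byte[rowBytes];
        for (int y = 0; y < bitmap.Height; y++)
        {
            IntPtr rowPtr = IntPtr.Add(bmpData.Scan0, y * bmpData.Stride);
            Marshal.Copy(rowPtr, row, 0, rowBytes);
            for (int i = 0; i < rowBytes; i += bytesPerPixel)
            {
                byte gray = (byte)(0.299 * row[i + 2] + 0.587 * row[i + 1] + 0.114 * row[i]);
                row[i] = gray;     // Blue
                row[i + 1] = gray; // Green
                row[i + 2] = gray; // Red
            }
            Marshal.Copy(row, 0, rowPtr, rowBytes);
        }
    }
    finally
    {
        bitmap.UnlockBits(bmpData);
    }
}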
```

## Integration with Computer Vision Libraries

The `OnVideoFrameBitmap` event can be combined with popular computer vision libraries:

```csharp
// Example using a hypothetical computer vision library.
// ConvertBitmapToByteArray and _computerVisionProcessor are placeholders for your own code.
private void VideoProcessor_OnVideoFrameBitmap(object sender, VideoFrameBitmapEventArgs e)
{
    // Convert the bitmap to the format needed by the CV library
    byte[] imageData = ConvertBitmapToByteArray(e.Frame);

    // Process with the CV library
    var results = _computerVisionProcessor.DetectFaces(imageData, e.Frame.Width, e.Frame.Height);

    // Draw the results back onto the frame; the pen is created once and disposed
    using (Graphics g = Graphics.FromImage(e.Frame))
    using (Pen pen = new Pen(Color.Yellow, 2))
    {
        foreach (var face in results)
        {
            g.DrawRectangle(pen, face.X, face.Y, face.Width, face.Height);
        }

        e.UpdateData = true;
    }
}
```
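`ConvertBitmapToByteArray` above is a placeholder, not an SDK method. Computer vision libraries commonly expect a tightly packed pixel buffer, while the rows of a locked GDI+ bitmap are padded so each row's byte length (the `Stride`) is a multiple of four. One way to write such a helper is to copy each row without its padding, sketched below on a managed byte array; with a real `Bitmap`, you would first copy the locked data out with `Marshal.Copy`, as in the grayscale filter. The `PixelBuffers.PackRows` name is hypothetical.

```csharp
using System;

public static class PixelBuffers
{
    // Copies 'height' rows of 'width * bytesPerPixel' bytes out of a buffer whose
    // rows start 'stride' bytes apart, dropping the per-row padding.
    public static byte[] PackRows(byte[] source, int width, int height, int stride, int bytesPerPixel)
    {
        int rowBytes = width * bytesPerPixel;
        if (stride < rowBytes)
            throw new ArgumentException("Stride is smaller than one row of pixels.", nameof(stride));
        if (height > 0 && source.Length < stride * (height - 1) + rowBytes)
            throw new ArgumentException("Source buffer is too small.", nameof(source));

        var packed = new byte[rowBytes * height];
        for (int y = 0; y < height; y++)
        {
            Buffer.BlockCopy(source, y * stride, packed, y * rowBytes, rowBytes);
        }
        return packed;
    }
}
```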

## Troubleshooting Common Issues

### Memory Leaks

If you experience memory growth during prolonged video processing:

1. Ensure all `Graphics` objects are disposed

2. Properly dispose of any temporary `Bitmap` objects

3. Avoid capturing large objects in lambda expressions

### Performance Degradation

If frame processing becomes sluggish:

1. Profile your event handler to identify bottlenecks

2. Consider reducing processing frequency

3. Optimize GDI+ operations or consider DirectX for performance-critical applications
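For step 1, a lightweight option before reaching for a profiler is to time the handler with `Stopwatch` and count frames that exceed the per-frame budget (one second divided by the frame rate). The `FrameBudgetMonitor` class below is a hypothetical helper, not part of the SDK; it measures only your handler, so aim to stay well under the budget it reports.

```csharp
using System;
using System.Diagnostics;

// Hypothetical helper that records how long each frame handler call takes
public sealed class FrameBudgetMonitor
{
    public TimeSpan Budget { get; }
    public int FramesMeasured { get; private set; }
    public int FramesOverBudget { get; private set; }
    public TimeSpan Slowest { get; private set; }

    public FrameBudgetMonitor(double framesPerSecond)
    {
        if (framesPerSecond <= 0) throw new ArgumentOutOfRangeException(nameof(framesPerSecond));
        // Computed in ticks to avoid millisecond rounding
        Budget = TimeSpan.FromTicks((long)(TimeSpan.TicksPerSecond / framesPerSecond));
    }

    // Runs the frame-processing action and records its duration
    public void Measure(Action processFrame)
    {
        var stopwatch = Stopwatch.StartNew();
        processFrame();
        stopwatch.Stop();
        Record(stopwatch.Elapsed);
    }

    // Records a duration measured elsewhere
    public void Record(TimeSpan elapsed)
    {
        FramesMeasured++;
        if (elapsed > Budget) FramesOverBudget++;
        if (elapsed > Slowest) Slowest = elapsed;
    }
}
```

In the handler, you could wrap your processing as `_monitor.Measure(() => DrawOverlay(e));`, where `DrawOverlay` is your own method, and log `FramesOverBudget` and `Slowest` periodically.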

## SDK Integration

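The `OnVideoFrameBitmap` event is available in the Video Capture SDK .Net, Video Edit SDK .Net, and Media Player SDK .Net, as indicated by the badges at the top of this page.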

## Required Dependencies

To use the functionality described in this guide, you'll need:

- SDK redistribution package

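- System.Drawing (part of .NET Framework; on .NET Core and .NET 5+ it comes from the System.Drawing.Common NuGet package, which is supported only on Windows from .NET 6 onward)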

- Windows GDI+ support

---

Visit our [GitHub](https://github.com/visioforge/.Net-SDK-s-samples) page to get more code samples and projects demonstrating these techniques in action.

---END OF PAGE---

# Local File: .\dotnet\general\code-samples\read-file-info.md

---

title: Reading Media File Information in C# for Developers

description: Learn how to extract detailed information from video and audio files in C# with step-by-step code examples. Discover how to access codecs, resolution, frame rate, bitrate, and metadata tags for building robust media applications.

sidebar_label: Reading Media File Information

---

# Reading Media File Information in C#

[!badge size="xl" target="blank" variant="info" text="Video Capture SDK .Net"](https://www.visioforge.com/video-capture-sdk-net) [!badge size="xl" target="blank" variant="info" text="Video Edit SDK .Net"](https://www.visioforge.com/video-edit-sdk-net) [!badge size="xl" target="blank" variant="info" text="Media Player SDK .Net"](https://www.visioforge.com/media-player-sdk-net)

## Introduction

Accessing detailed information embedded within media files is essential for developing sophisticated applications like media players, video editors, content management systems, and file analysis tools. Understanding properties such as codecs, resolution, frame rate, bitrate, duration, and embedded tags allows developers to build more intelligent and user-friendly software.

This guide demonstrates how to read comprehensive information from video and audio files using C# and the `MediaInfoReader` class. The techniques shown are applicable across various .NET projects and provide a foundation for handling media files programmatically.

## Why Extract Media File Information?

Media file information serves multiple purposes in application development:

- **User Experience**: Display technical details to users in media players

- **Compatibility Checks**: Verify if files meet required specifications

- **Automated Processing**: Configure encoding parameters based on source properties

- **Content Organization**: Catalog media libraries with accurate metadata

- **Quality Assessment**: Evaluate media files for potential issues

## Implementation Guide

Let's explore the process of extracting media file information in a step-by-step approach. The examples assume a WinForms application with a `TextBox` control named `mmInfo` for displaying the extracted information.

### Step 1: Initialize the Media Information Reader

The first step involves creating an instance of the `MediaInfoReader` class:

```csharp
// Import the necessary namespaces
using VisioForge.Core.MediaInfo; // Namespace for MediaInfoReader
using VisioForge.Core.Helpers;   // Namespace for TagLibHelper (optional)

// Create an instance of MediaInfoReader
var infoReader = new MediaInfoReader();
```

This initialization prepares the reader to process media files.

### Step 2: Verify File Playability (Optional)

Before diving into detailed analysis, it's often useful to check if the file is supported:

```csharp
// Define variables to hold potential error information
FilePlaybackError errorCode;
string errorText;

// Specify the path to the media file
string filename = @"C:\path\to\your\mediafile.mp4"; // Replace with your actual file path

// Check if the file is playable
if (MediaInfoReader.IsFilePlayable(filename, out errorCode, out errorText))
{
    // Display success message
    mmInfo.Text += "Status: This file appears to be playable." + Environment.NewLine;
}
else
{
    // Display error message including the error code and description
    mmInfo.Text += $"Status: This file might not be playable. Error: {errorCode} - {errorText}" + Environment.NewLine;
}

mmInfo.Text += "------------------------------------" + Environment.NewLine;
```

This verification provides early feedback on file integrity and compatibility.

### Step 3: Extract Detailed Stream Information

Now we can extract the rich metadata from the file:

```csharp
try
{
    // Assign the filename to the reader
    infoReader.Filename = filename;

    // Read the file information (true for full analysis)
    infoReader.ReadFileInfo(true);

    // Process video streams
    mmInfo.Text += $"Found {infoReader.VideoStreams.Count} video stream(s)." + Environment.NewLine;

    for (int i = 0; i < infoReader.VideoStreams.Count; i++)
    {
        var stream = infoReader.VideoStreams[i];

        mmInfo.Text += Environment.NewLine;
        mmInfo.Text += $"--- Video Stream #{i + 1} ---" + Environment.NewLine;
        mmInfo.Text += $" Codec: {stream.Codec}" + Environment.NewLine;
        mmInfo.Text += $" Duration: {stream.Duration}" + Environment.NewLine;
        mmInfo.Text += $" Dimensions: {stream.Width}x{stream.Height}" + Environment.NewLine;
        mmInfo.Text += $" FOURCC: {stream.FourCC}" + Environment.NewLine;

        if (stream.AspectRatio != null && stream.AspectRatio.Item1 > 0 && stream.AspectRatio.Item2 > 0)
        {
            mmInfo.Text += $" Aspect Ratio: {stream.AspectRatio.Item1}:{stream.AspectRatio.Item2}" + Environment.NewLine;
        }

        mmInfo.Text += $" Frame Rate: {stream.FrameRate:F2} fps" + Environment.NewLine;
        mmInfo.Text += $" Bitrate: {stream.Bitrate / 1000.0:F0} kbps" + Environment.NewLine;
        mmInfo.Text += $" Frames Count: {stream.FramesCount}" + Environment.NewLine;
    }

    // Process audio streams
    mmInfo.Text += Environment.NewLine;
    mmInfo.Text += $"Found {infoReader.AudioStreams.Count} audio stream(s)." + Environment.NewLine;

    for (int i = 0; i < infoReader.AudioStreams.Count; i++)
    {
        var stream = infoReader.AudioStreams[i];

        mmInfo.Text += Environment.NewLine;
        mmInfo.Text += $"--- Audio Stream #{i + 1} ---" + Environment.NewLine;
        mmInfo.Text += $" Codec: {stream.Codec}" + Environment.NewLine;
        mmInfo.Text += $" Codec Info: {stream.CodecInfo}" + Environment.NewLine;
        mmInfo.Text += $" Duration: {stream.Duration}" + Environment.NewLine;
        mmInfo.Text += $" Bitrate: {stream.Bitrate / 1000.0:F0} kbps" + Environment.NewLine;
        mmInfo.Text += $" Channels: {stream.Channels}" + Environment.NewLine;
        mmInfo.Text += $" Sample Rate: {stream.SampleRate} Hz" + Environment.NewLine;
        mmInfo.Text += $" Bits Per Sample (BPS): {stream.BPS}" + Environment.NewLine;
        mmInfo.Text += $" Language: {stream.Language}" + Environment.NewLine;
    }

    // Process subtitle streams
    mmInfo.Text += Environment.NewLine;
    mmInfo.Text += $"Found {infoReader.Subtitles.Count} subtitle stream(s)." + Environment.NewLine;

    for (int i = 0; i < infoReader.Subtitles.Count; i++)
    {
        var stream = infoReader.Subtitles[i];

        mmInfo.Text += Environment.NewLine;
        mmInfo.Text += $"--- Subtitle Stream #{i + 1} ---" + Environment.NewLine;
        mmInfo.Text += $" Codec/Format: {stream.Codec}" + Environment.NewLine;
        mmInfo.Text += $" Name: {stream.Name}" + Environment.NewLine;
        mmInfo.Text += $" Language: {stream.Language}" + Environment.NewLine;
    }
}
catch (Exception ex)
{
    // Handle potential errors during file reading
    mmInfo.Text += $"{Environment.NewLine}Error reading file info: {ex.Message}{Environment.NewLine}";
}
finally
{
    // Important: dispose the reader to release file handles and resources
    infoReader.Dispose();
}
```

The code iterates through each collection (`VideoStreams`, `AudioStreams`, and `Subtitles`), extracting and displaying relevant information for every stream found.
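Each `mmInfo.Text += ...` statement builds a new string and makes the `TextBox` update its contents, which adds up for files with many streams. A common alternative is to build the report in a `StringBuilder` and assign it to the control once. The sketch below formats values the same way as the code above; `VideoStreamSummary` and `MediaReport` are hypothetical types used to keep the example independent of the SDK, so you would fill them from `infoReader.VideoStreams` in your code.

```csharp
using System.Collections.Generic;
using System.Globalization;
using System.Text;

// Hypothetical, SDK-independent holder for the values printed in Step 3
public sealed class VideoStreamSummary
{
    public string Codec { get; set; } = "";
    public int Width { get; set; }
    public int Height { get; set; }
    public double FrameRate { get; set; }
    public long Bitrate { get; set; } // bits per second
}

public static class MediaReport
{
    // Builds the whole video-stream section in memory and returns it as one string
    public static string FormatVideoStreams(IReadOnlyList<VideoStreamSummary> streams)
    {
        var sb = new StringBuilder();
        var inv = CultureInfo.InvariantCulture; // stable decimal separator
        sb.AppendLine($"Found {streams.Count} video stream(s).");
        for (int i = 0; i < streams.Count; i++)
        {
            var s = streams[i];
            sb.AppendLine();
            sb.AppendLine($"--- Video Stream #{i + 1} ---");
            sb.AppendLine($" Codec: {s.Codec}");
            sb.AppendLine($" Dimensions: {s.Width}x{s.Height}");
            sb.AppendLine(string.Format(inv, " Frame Rate: {0:F2} fps", s.FrameRate));
            sb.AppendLine(string.Format(inv, " Bitrate: {0:F0} kbps", s.Bitrate / 1000.0));
        }
        return sb.ToString();
    }
}
```

With this approach, `mmInfo.Text = MediaReport.FormatVideoStreams(summaries);` updates the control once per file.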

### Step 4: Extract Metadata Tags

Beyond technical stream information, media files often contain metadata tags:

```csharp
// Read metadata tags
mmInfo.Text += Environment.NewLine + "--- Metadata Tags ---" + Environment.NewLine;

try
{
    // Use TagLibHelper to read tags from the file
    var tags = TagLibHelper.ReadTags(filename);

    // Check if tags were successfully read
    if (tags != null)
    {
        mmInfo.Text += $"Title: {tags.Title}" + Environment.NewLine;
        mmInfo.Text += $"Artist(s): {string.Join(", ", tags.Performers ?? new string[0])}" + Environment.NewLine;
        mmInfo.Text += $"Album: {tags.Album}" + Environment.NewLine;
        mmInfo.Text += $"Year: {tags.Year}" + Environment.NewLine;
        mmInfo.Text += $"Genre: {string.Join(", ", tags.Genres ?? new string[0])}" + Environment.NewLine;
        mmInfo.Text += $"Comment: {tags.Comment}" + Environment.NewLine;
    }
    else
    {
        mmInfo.Text += "No standard metadata tags found or readable." + Environment.NewLine;
    }
}
catch (Exception ex)
{
    // Handle errors during tag reading
    mmInfo.Text += $"Error reading tags: {ex.Message}" + Environment.NewLine;
}
```

## Best Practices for Media File Analysis

When implementing media file analysis in your applications, consider these best practices:

### Error Handling

Always wrap file operations in appropriate try-catch blocks. Media files can be corrupted, inaccessible, or in unexpected formats, which might cause exceptions.

```csharp
try
{
    // Media file operations
}
catch (Exception ex)
{
    // Log the error and provide user feedback
}
```
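Catching specific exception types lets you tell the user exactly what went wrong instead of showing a generic failure. The sketch below checks, using only `System.IO`, that a file can be opened for reading before you hand it to the reader; the `MediaFileCheck.TryOpenForReading` name is hypothetical, and errors raised by the SDK itself would still go through the general `catch` shown above.

```csharp
using System;
using System.IO;

public static class MediaFileCheck
{
    // Returns true if the file exists and can be opened for reading;
    // otherwise returns false with a user-facing message in 'error'.
    public static bool TryOpenForReading(string path, out string error)
    {
        try
        {
            using (new FileStream(path, FileMode.Open, FileAccess.Read, FileShare.ReadWrite))
            {
                error = null;
                return true;
            }
        }
        catch (FileNotFoundException)
        {
            error = $"File not found: {path}";
        }
        catch (DirectoryNotFoundException)
        {
            error = $"Folder not found: {path}";
        }
        catch (UnauthorizedAccessException)
        {
            error = $"Access denied: {path}";
        }
        catch (IOException ex)
        {
            // For example, another process holds the file exclusively
            error = $"Cannot read {path}: {ex.Message}";
        }
        return false;
    }
}
```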

### Resource Management

Properly dispose of objects that access file resources to prevent file locking issues:

```csharp
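// Preferred: the using statement disposes the reader automatically
using (var infoReader = new MediaInfoReader())
{
    // Use the reader
}

// Or dispose manually in a finally block
var reader = new MediaInfoReader();
try
{
    // Operations
}
finally
{
    reader.Dispose();
}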
```

### Performance Considerations

For large media libraries, consider:

1. Implementing caching mechanisms for repeated analysis

2. Using background threads for processing to keep UI responsive

3. Limiting the depth of analysis for initial quick scans
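Points 1 and 2 can be combined: run the analysis on a thread-pool thread with `Task.Run` and cache results keyed by full path and last-write time, so an unchanged file is analyzed only once. In the sketch below, `analyze` stands for whatever code reads the file (for example, the Step 3 logic returning your own summary type). `MediaInfoCache` is a hypothetical class; it never evicts entries and keeps failed analyses cached, both of which you would need to handle for very large libraries.

```csharp
using System;
using System.Collections.Concurrent;
using System.IO;
using System.Threading.Tasks;

public sealed class MediaInfoCache<TInfo>
{
    private readonly ConcurrentDictionary<(string Path, DateTime LastWriteUtc), Lazy<Task<TInfo>>> _cache =
        new ConcurrentDictionary<(string Path, DateTime LastWriteUtc), Lazy<Task<TInfo>>>();
    private readonly Func<string, TInfo> _analyze;

    public MediaInfoCache(Func<string, TInfo> analyze)
    {
        _analyze = analyze ?? throw new ArgumentNullException(nameof(analyze));
    }

    // Returns cached info for an unchanged file; otherwise analyzes it on a thread-pool thread
    public Task<TInfo> GetAsync(string path)
    {
        var key = (Path.GetFullPath(path), File.GetLastWriteTimeUtc(path));
        // Lazy makes sure the analysis starts at most once per key, even under concurrent calls
        var entry = _cache.GetOrAdd(key, k => new Lazy<Task<TInfo>>(() => Task.Run(() => _analyze(k.Path))));
        return entry.Value;
    }
}
```

From a WinForms event handler, `var info = await cache.GetAsync(filename);` keeps the UI responsive while the file is read.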

## Required Components

For successful implementation, ensure your project includes the necessary dependencies as specified in the SDK documentation.

## Conclusion

Extracting information from media files is a powerful capability for developers building applications that work with audio and video content. With the techniques outlined in this guide, you can access detailed technical properties and metadata tags to enhance your application's functionality.

The `MediaInfoReader` class provides a convenient and efficient way to extract the necessary metadata, allowing you to build more sophisticated media handling features in your C# applications.

For more advanced scenarios, explore the full capabilities of the SDK and consult the detailed documentation. You can find additional code samples and examples on GitHub to further expand your media file processing capabilities.

---END OF PAGE---

# Local File: .\dotnet\general\code-samples\select-video-renderer-winforms.md

---

title: Video Renderer Selection Guide for .NET Applications

description: Learn how to implement and optimize video renderers in .NET applications using DirectShow-based SDK engines. This in-depth guide covers VideoRenderer, VMR9, and EVR with practical code examples for WinForms development.

sidebar_label: Select Video Renderer (WinForms)

---

# Video Renderer Selection Guide for WinForms Applications

[!badge size="xl" target="blank" variant="info" text="Video Capture SDK .Net"](https://www.visioforge.com/video-capture-sdk-net) [!badge size="xl" target="blank" variant="info" text="Video Edit SDK .Net"](https://www.visioforge.com/video-edit-sdk-net) [!badge size="xl" target="blank" variant="info" text="Media Player SDK .Net"](https://www.visioforge.com/media-player-sdk-net)

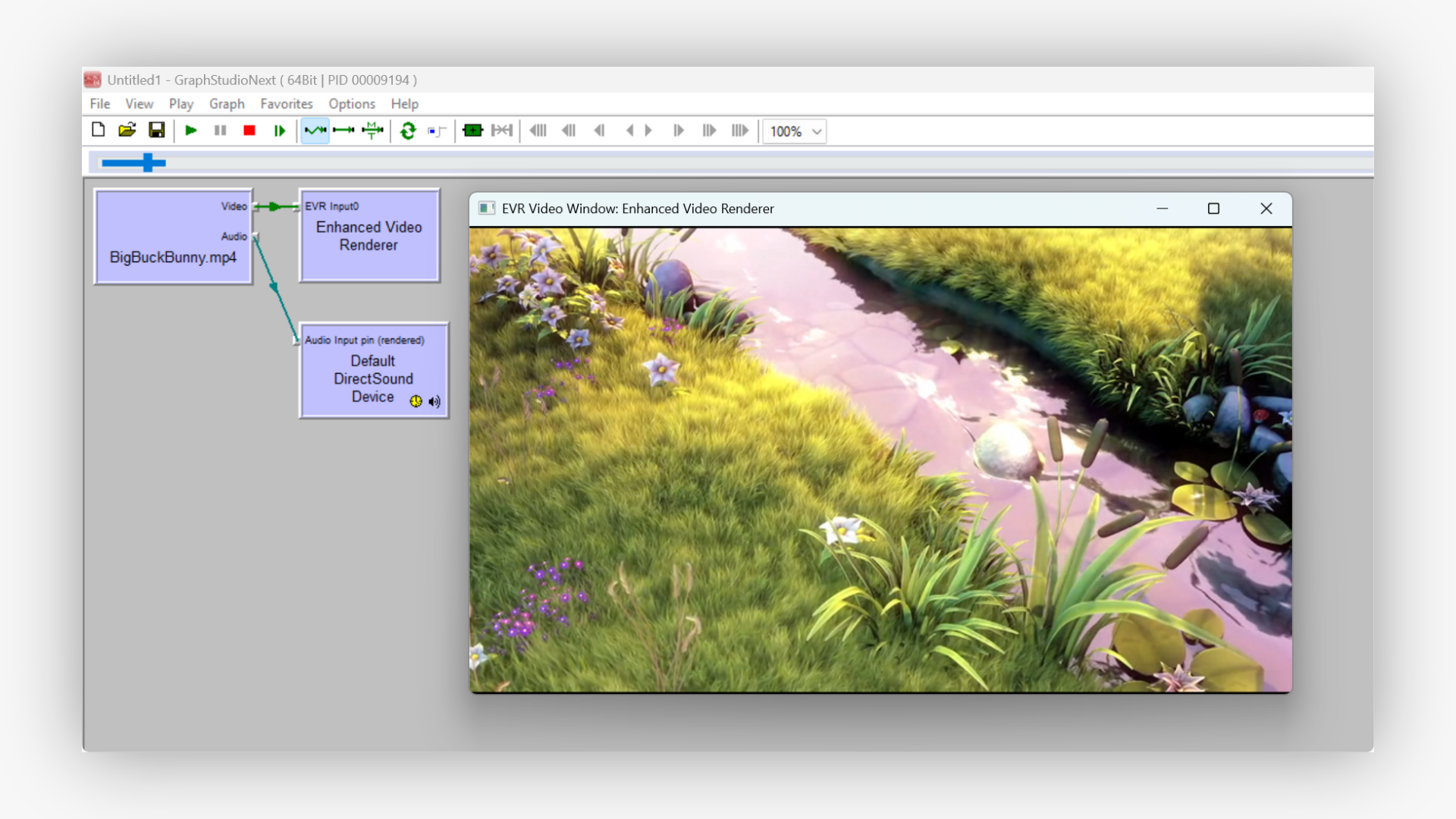

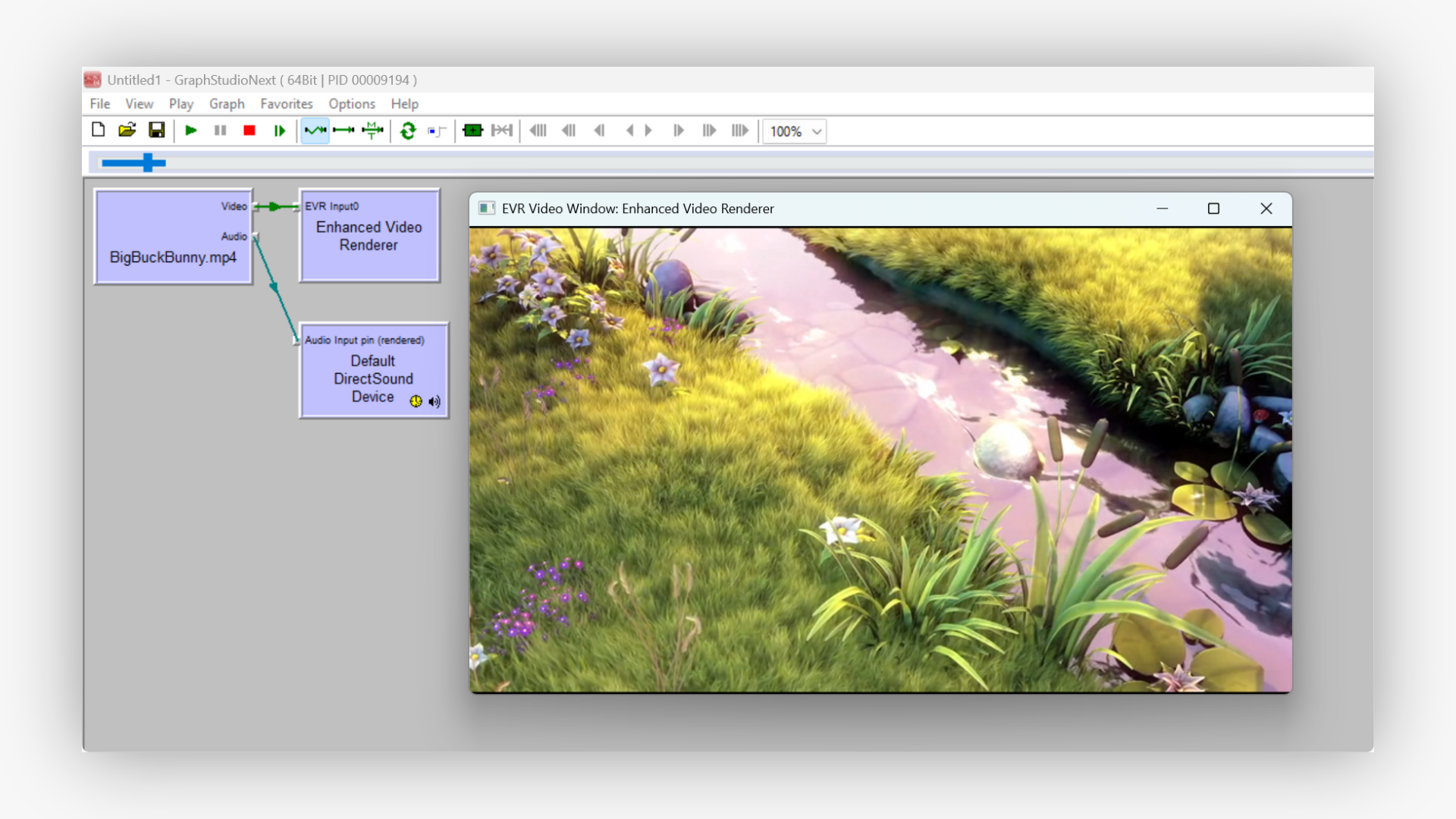

## Introduction to Video Rendering in .NET

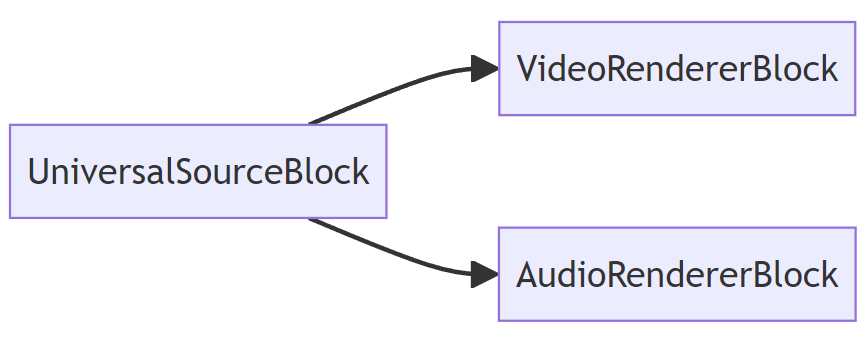

When developing multimedia applications in .NET, selecting the appropriate video renderer is crucial for performance and compatibility. This guide covers the DirectShow-based SDK engines (VideoCaptureCore, VideoEditCore, and MediaPlayerCore), which share the same renderer API. The examples use a `VideoCapture1` instance; the same calls apply to the other engines.

Video renderers serve as the bridge between your application and the display hardware, determining how video content is processed and presented to the user. The right choice can significantly impact performance, visual quality, and hardware resource utilization.

## Understanding Available Video Renderer Options

DirectShow in Windows offers three primary renderer options, each with distinct characteristics and use cases. Let's explore each renderer in detail to help you make an informed decision for your application.

### Legacy Video Renderer (GDI-based)

The Video Renderer is the oldest option in the DirectShow ecosystem. It relies on GDI (Graphics Device Interface) for drawing operations.

**Key characteristics:**

- Software-based rendering without hardware acceleration

- Compatible with older systems and configurations

- Lower performance ceiling compared to modern alternatives

- Simple implementation with minimal configuration options

**Implementation example:**

```cs
VideoCapture1.Video_Renderer.VideoRenderer = VideoRendererMode.VideoRenderer;
```

**When to use:**

- Compatibility is the primary concern

- Application targets older hardware or operating systems

- Minimal video processing requirements

- Troubleshooting issues with newer renderers

### Video Mixing Renderer 9 (VMR9)

VMR9 represents a significant improvement over the legacy renderer, introducing support for hardware acceleration and advanced features.

**Key characteristics:**

- Hardware-accelerated rendering through DirectX 9

- Mixing of multiple video streams

- Advanced deinterlacing options

- Alpha blending and compositing capabilities

- Custom video effects processing

**Implementation example:**

```cs
VideoCapture1.Video_Renderer.VideoRenderer = VideoRendererMode.VMR9;
```

**When to use:**

- Modern applications requiring good performance

- Video editing or composition features are needed

- Multiple video stream scenarios

- Applications that need to balance performance and compatibility

### Enhanced Video Renderer (EVR)

EVR is the most advanced option, available in Windows Vista and later. It is built on Media Foundation components but is also exposed as a DirectShow filter, which is how the DirectShow-based engines use it.

**Key characteristics:**

- Latest hardware acceleration technologies

- Superior video quality and performance

- Enhanced color space processing

- Better multi-monitor support

- More efficient CPU usage

- Improved synchronization mechanisms

**Implementation example:**

```cs
VideoCapture1.Video_Renderer.VideoRenderer = VideoRendererMode.EVR;
```

**When to use:**

- Modern applications targeting Windows Vista or later

- Maximum performance and quality are required

- Applications handling HD or 4K content

- When advanced synchronization is important

- Multiple display environments

## Advanced Configuration Options

Beyond just selecting a renderer, the SDK provides various configuration options to fine-tune video presentation.

### Working with Deinterlacing Modes

When displaying interlaced video content (common in broadcast sources), proper deinterlacing improves visual quality significantly. The SDK supports various deinterlacing algorithms depending on the renderer chosen.

First, retrieve the available deinterlacing modes:

```cs
VideoCapture1.Video_Renderer_Deinterlace_Modes_Fill();

// Populate a dropdown with the available modes
foreach (string deinterlaceMode in VideoCapture1.Video_Renderer_Deinterlace_Modes())
{
    cbDeinterlaceModes.Items.Add(deinterlaceMode);
}
```

Then apply a selected deinterlacing mode:

```cs
// Assuming the user selected a mode from cbDeinterlaceModes
string selectedMode = cbDeinterlaceModes.SelectedItem.ToString();
VideoCapture1.Video_Renderer.DeinterlaceMode = selectedMode;
VideoCapture1.Video_Renderer_Update();
```

VMR9 and EVR support various deinterlacing algorithms including:

- Bob (simple line doubling)

- Weave (field interleaving)

- Motion adaptive

- Motion compensated (highest quality)

The availability of specific algorithms depends on the video card capabilities and driver implementation.

### Managing Aspect Ratio and Stretch Modes

When displaying video in a window or control that doesn't match the source's native aspect ratio, you need to decide how to handle this discrepancy. The SDK provides multiple stretch modes to address different scenarios.

#### Stretch Mode

This mode stretches the video to fill the entire display area, potentially distorting the image:

```cs
VideoCapture1.Video_Renderer.StretchMode = VideoRendererStretchMode.Stretch;
VideoCapture1.Video_Renderer_Update();
```

**Use cases:**

- When aspect ratio is not critical

- Filling the entire display area is more important than proportions

- Source and display have similar aspect ratios

- User interface constraints require full-area usage

#### Letterbox Mode

This mode preserves the original aspect ratio by adding black borders as needed:

```cs
VideoCapture1.Video_Renderer.StretchMode = VideoRendererStretchMode.Letterbox;
VideoCapture1.Video_Renderer_Update();
```

**Use cases:**

- Maintaining correct proportions is essential

- Professional video applications

- Content where distortion would be noticeable or problematic

- Cinema or broadcast content viewing

#### Crop Mode

This mode fills the display area while preserving aspect ratio, potentially cropping some content:

```cs
VideoCapture1.Video_Renderer.StretchMode = VideoRendererStretchMode.Crop;
VideoCapture1.Video_Renderer_Update();
```

**Use cases:**

- Consumer video applications where filling the screen is preferred

- Content where edges are less important than center

- Social media-style video display

- When trying to eliminate letterboxing in already letterboxed content
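The difference between Letterbox and Crop comes down to which scale factor is applied to the source. A minimal sketch of the geometry, not the SDK's internal code: Letterbox scales by the smaller of the width and height ratios so the whole frame fits, Crop scales by the larger one so the display area is fully covered, and in both cases the scaled frame is centered.

```csharp
using System;

// Destination rectangle for a video frame inside a display area (may extend past the edges)
public readonly struct DisplayRect
{
    public DisplayRect(double x, double y, double width, double height)
    {
        X = x; Y = y; Width = width; Height = height;
    }

    public double X { get; }
    public double Y { get; }
    public double Width { get; }
    public double Height { get; }
}

public static class AspectFit
{
    // Letterbox: the whole frame is visible; unused space becomes borders
    public static DisplayRect Letterbox(double srcW, double srcH, double dstW, double dstH) =>
        Fit(srcW, srcH, dstW, dstH, Math.Min(dstW / srcW, dstH / srcH));

    // Crop: the display is fully covered; parts of the frame fall outside it
    public static DisplayRect Crop(double srcW, double srcH, double dstW, double dstH) =>
        Fit(srcW, srcH, dstW, dstH, Math.Max(dstW / srcW, dstH / srcH));

    private static DisplayRect Fit(double srcW, double srcH, double dstW, double dstH, double scale)
    {
        double w = srcW * scale;
        double h = srcH * scale;
        // Center the scaled frame; a negative offset means the frame is cut on that axis
        return new DisplayRect((dstW - w) / 2, (dstH - h) / 2, w, h);
    }
}
```

For a 1920x1080 source in an 800x800 area, Letterbox gives an 800x450 image with 175-pixel bars above and below, while Crop gives a scaled image of about 1422x800 with roughly 311 pixels cut from each side.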

### Performance Optimization Techniques

#### Adjusting Buffer Count

For smoother playback, especially with high-resolution content, adjusting the buffer count can help:

```cs
// Increase the buffer count for smoother playback
VideoCapture1.Video_Renderer.BuffersCount = 3;
VideoCapture1.Video_Renderer_Update();
```

#### Enabling Hardware Acceleration

Ensure hardware acceleration is enabled for maximum performance:

```cs
// For VMR9
VideoCapture1.Video_Renderer.VMR9.UseOverlays = true;
VideoCapture1.Video_Renderer.VMR9.UseDynamicTextures = true;

// For EVR
VideoCapture1.Video_Renderer.EVR.EnableHardwareTransforms = true;

VideoCapture1.Video_Renderer_Update();
```

## Troubleshooting Common Issues

### Renderer Compatibility Problems

If you encounter issues with a specific renderer, try falling back to a more compatible option:

```cs
try
{
    // Try EVR first
    VideoCapture1.Video_Renderer.VideoRenderer = VideoRendererMode.EVR;
    VideoCapture1.Video_Renderer_Update();
}
catch
{
    try
    {
        // Fall back to VMR9
        VideoCapture1.Video_Renderer.VideoRenderer = VideoRendererMode.VMR9;
        VideoCapture1.Video_Renderer_Update();
    }
    catch
    {
        // Last resort: the legacy renderer
        VideoCapture1.Video_Renderer.VideoRenderer = VideoRendererMode.VideoRenderer;
        VideoCapture1.Video_Renderer_Update();
    }
}
```
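Nested `try`/`catch` blocks grow with every extra option. The same fallback order can be written as a loop over candidates; below is a sketch with a hypothetical `Fallback.ApplyFirstWorking` helper and a stand-in `RendererChoice` enum, so it compiles without the SDK. In your code, the `apply` delegate would set `Video_Renderer.VideoRenderer` and call `Video_Renderer_Update()`.

```csharp
using System;
using System.Collections.Generic;

public static class Fallback
{
    // Tries each candidate in order and returns the first one for which 'apply' succeeds.
    // Throws an AggregateException holding every failure if no candidate works.
    public static T ApplyFirstWorking<T>(IEnumerable<T> candidates, Action<T> apply)
    {
        var errors = new List<Exception>();
        foreach (var candidate in candidates)
        {
            try
            {
                apply(candidate);
                return candidate;
            }
            catch (Exception ex)
            {
                errors.Add(ex);
            }
        }
        throw new AggregateException("No candidate could be applied.", errors);
    }
}

// Stand-in for the SDK's VideoRendererMode values, for illustration only
public enum RendererChoice { EVR, VMR9, VideoRenderer }
```

Unlike the bare `catch` blocks, this keeps the exceptions from the failed attempts, which is useful for logging why the preferred renderer was rejected.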

### Display Issues on Multi-Monitor Systems

For applications that might run on multi-monitor setups, additional configuration might be necessary:

```cs
// Specify which monitor to use for full-screen mode
VideoCapture1.Video_Renderer.MonitorIndex = 0; // Primary monitor
VideoCapture1.Video_Renderer_Update();
```

## Best Practices and Recommendations

1. **Choose the right renderer for your target environment**:
   - For modern Windows: EVR
   - For broad compatibility: VMR9
   - For legacy systems: Video Renderer

2. **Test on various hardware configurations**: Video rendering can behave differently across GPU vendors and driver versions.

3. **Implement renderer fallback logic**: Always have a backup plan if the preferred renderer fails.

4. **Consider your video content**: Higher resolution or interlaced content will benefit more from advanced renderers.

5. **Balance quality vs. performance**: The highest quality settings might not always deliver the best user experience if they impact performance.

## Required Dependencies

To ensure proper functionality of these renderers, make sure to include:

- SDK redistributable packages

- DirectX End-User Runtime (latest version recommended)

- .NET Framework runtime appropriate for your application

## Conclusion

Selecting and configuring the right video renderer is an important decision in developing high-quality multimedia applications. By understanding the strengths and limitations of each renderer option, you can significantly improve the user experience of your WinForms applications.

The optimal choice depends on your specific requirements, target audience, and the nature of your video content. In most modern applications, EVR should be your first choice, with VMR9 as a reliable fallback option.

---

Visit our [GitHub](https://github.com/visioforge/.Net-SDK-s-samples) page to get more code samples.

---END OF PAGE---

# Local File: .\dotnet\general\code-samples\text-onvideoframebuffer.md

---

title: Text Overlay Implementation with OnVideoFrameBuffer

description: Learn how to create custom text overlays in video applications using the OnVideoFrameBuffer event in .NET video processing. This detailed guide with C# code examples shows you how to implement dynamic text elements on video frames for professional applications.

sidebar_label: Draw Text Overlay Using OnVideoFrameBuffer Event

---

# Creating Custom Text Overlays with OnVideoFrameBuffer in .NET

[!badge size="xl" target="blank" variant="info" text="Video Capture SDK .Net"](https://www.visioforge.com/video-capture-sdk-net) [!badge size="xl" target="blank" variant="info" text="Video Edit SDK .Net"](https://www.visioforge.com/video-edit-sdk-net) [!badge size="xl" target="blank" variant="info" text="Media Player SDK .Net"](https://www.visioforge.com/media-player-sdk-net)

## Introduction to Text Overlays in Video Processing

Adding text overlays to video content is a common requirement in many professional applications, from video editing software to security camera feeds, broadcasting tools, and educational applications. While the standard video effect APIs provide basic text overlay capabilities, developers often need more control over how text appears on video frames.

This guide demonstrates how to manually implement custom text overlays using the OnVideoFrameBuffer event available in VideoCaptureCore, VideoEditCore, and MediaPlayerCore engines. By intercepting video frames during processing, you can apply custom text and graphics with precise control over positioning, formatting, and animation.

## Understanding the OnVideoFrameBuffer Event

The OnVideoFrameBuffer event is a powerful hook that gives developers direct access to the video frame buffer during processing. This event fires for each frame of video, providing an opportunity to modify the frame data before it's displayed or encoded.

Key benefits of using OnVideoFrameBuffer for text overlays include:

- **Frame-level access**: Modify individual frames with pixel-perfect precision

- **Dynamic content**: Update text based on real-time data or timestamps

- **Custom styling**: Apply custom fonts, colors, and effects beyond what built-in APIs offer

- **Performance optimizations**: Implement efficient rendering techniques for high-performance applications

## Implementation Overview

The technique presented here uses the following components:

1. An event handler for OnVideoFrameBuffer that processes each video frame

2. A VideoEffectTextLogo object to define text properties

3. The FastImageProcessing API to render text onto the frame buffer

This approach is particularly useful when you need to:

- Display dynamic data like timestamps, metadata, or sensor readings

- Create animated text effects

- Position text with pixel-perfect accuracy

- Apply custom styling not available through standard APIs
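Pixel-accurate placement often means pinning text to a corner of the frame instead of using hard-coded coordinates. The sketch below is a standalone helper, not part of the SDK. It assumes you already know the pixel size of the rendered text (measured yourself, for example with `TextRenderer.MeasureText` in WinForms). `Corner` and `OverlayLayout` are hypothetical names.

```cs
using System;

// Hypothetical helper (not an SDK type): the corners an overlay can be pinned to.
public enum Corner { TopLeft, TopRight, BottomLeft, BottomRight }

public static class OverlayLayout
{
    // Returns the (left, top) position that keeps a textWidth x textHeight box
    // inside a frameWidth x frameHeight frame, `margin` pixels from the corner.
    public static (int Left, int Top) Anchor(
        Corner corner, int frameWidth, int frameHeight,
        int textWidth, int textHeight, int margin)
    {
        int right = frameWidth - textWidth - margin;
        int bottom = frameHeight - textHeight - margin;

        (int left, int top) = corner switch
        {
            Corner.TopLeft => (margin, margin),
            Corner.TopRight => (right, margin),
            Corner.BottomLeft => (margin, bottom),
            _ => (right, bottom),
        };

        // Never return a negative coordinate, even if the text is larger than the frame.
        return (Math.Max(0, left), Math.Max(0, top));
    }
}
```

In the frame handler you would assign the result to `textLogo.Left` and `textLogo.Top` before rendering, so the position follows the actual frame size.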

## Sample Code Implementation

The following C# example demonstrates how to implement a basic text overlay system using the OnVideoFrameBuffer event:

```cs
private void SDK_OnVideoFrameBuffer(object sender, VideoFrameBufferEventArgs e)
{
    if (!logoInitiated)
    {
        logoInitiated = true;
        InitTextLogo();
    }

    FastImageProcessing.AddTextLogo(null, e.Frame.Data, e.Frame.Width, e.Frame.Height, ref textLogo, e.Timestamp, 0);
}

private bool logoInitiated = false;

private VideoEffectTextLogo textLogo = null;

private void InitTextLogo()
{
    textLogo = new VideoEffectTextLogo(true);
    textLogo.Text = "Hello world!";
    textLogo.Left = 50;
    textLogo.Top = 50;
}
```

## Detailed Code Explanation

Let's break down the key components of this implementation:

### The Event Handler

```cs
private void SDK_OnVideoFrameBuffer(object sender, VideoFrameBufferEventArgs e)
```

This method is triggered for each video frame. The VideoFrameBufferEventArgs provides access to:

- Frame data (pixel buffer)

- Frame dimensions (width and height)

- Timestamp information
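To illustrate what frame-level access means, the sketch below fills a solid rectangle (for example, a backdrop behind the text) directly in a frame buffer. It assumes a packed 24-bit BGR layout with `stride` bytes per row; the real pixel format, stride, and row order of `e.Frame` depend on the SDK and the source, so verify them before writing to the live buffer. If the buffer is stored bottom-up, as uncompressed RGB often is in DirectShow, row 0 is the bottom line. How you obtain a `Span<byte>` over `e.Frame.Data` (for example, by copying with `Marshal.Copy`) depends on its type, which this sketch does not assume. `FrameDrawing` is a hypothetical helper.

```cs
using System;

public static class FrameDrawing
{
    // Fills a rectangle with a solid color in a packed 24-bit frame.
    // Assumption: 3 bytes per pixel in B, G, R order and `stride` bytes per row
    // (stride can exceed width * 3 because of row padding).
    public static void FillRect(
        Span<byte> frame, int width, int height, int stride,
        int x, int y, int rectWidth, int rectHeight,
        byte b, byte g, byte r)
    {
        // Clip the rectangle to the frame so nothing is written out of bounds.
        int x0 = Math.Max(0, x);
        int y0 = Math.Max(0, y);
        int x1 = Math.Min(width, x + rectWidth);
        int y1 = Math.Min(height, y + rectHeight);

        for (int row = y0; row < y1; row++)
        {
            int rowStart = row * stride;
            for (int col = x0; col < x1; col++)
            {
                int offset = rowStart + col * 3;
                frame[offset] = b;
                frame[offset + 1] = g;
                frame[offset + 2] = r;
            }
        }
    }
}
```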

### Initialization Logic

```cs
if (!logoInitiated)
{
    logoInitiated = true;
    InitTextLogo();
}
```

This code ensures the text logo is only initialized once, preventing unnecessary object creation for each frame. This pattern is important for performance when processing video at high frame rates.
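The `logoInitiated` flag is fine when frames arrive on a single thread, but it is not thread-safe: if the handler could ever run concurrently, two calls may both see `false`. `Lazy<T>` gives the same run-once behavior with a thread-safety guarantee. The sketch uses a hypothetical `TextOverlaySettings` class in place of `VideoEffectTextLogo` so it compiles on its own; in real code the factory would create and configure the SDK object. Because a property cannot be passed by `ref`, you would copy `Logo` into a local variable before passing it to `AddTextLogo`.

```cs
using System;
using System.Threading;

// Hypothetical stand-in for VideoEffectTextLogo so the sketch compiles on its own.
public sealed class TextOverlaySettings
{
    public string Text { get; set; } = "";
    public int Left { get; set; }
    public int Top { get; set; }
}

public sealed class OverlayState
{
    // Counts factory calls so the run-once behavior can be observed.
    public int FactoryCalls;

    private readonly Lazy<TextOverlaySettings> _logo;

    public OverlayState()
    {
        // ExecutionAndPublication runs the factory exactly once,
        // even if several threads request Value at the same time.
        _logo = new Lazy<TextOverlaySettings>(() =>
        {
            Interlocked.Increment(ref FactoryCalls);
            return new TextOverlaySettings { Text = "Hello world!", Left = 50, Top = 50 };
        }, LazyThreadSafetyMode.ExecutionAndPublication);
    }

    // Read from the frame handler; the first access creates the settings.
    public TextOverlaySettings Logo => _logo.Value;
}
```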

### Text Logo Setup

```cs
private void InitTextLogo()
{
    textLogo = new VideoEffectTextLogo(true);
    textLogo.Text = "Hello world!";
    textLogo.Left = 50;
    textLogo.Top = 50;
}
```

The VideoEffectTextLogo class is used to define the properties of the text overlay:

- The text content ("Hello world!")

- Position coordinates (50 pixels from both left and top)

### Rendering the Text Overlay

```cs
FastImageProcessing.AddTextLogo(null, e.Frame.Data, e.Frame.Width, e.Frame.Height, ref textLogo, e.Timestamp, 0);
```

This line does the actual work of rendering the text onto the frame:

- It takes the frame data buffer as input

- Uses the frame dimensions to properly position the text

- References the textLogo object containing text properties

- Can utilize the timestamp for dynamic content

## Advanced Customization Options

While the basic example demonstrates a simple static text overlay, the VideoEffectTextLogo class supports numerous customization options:

### Text Formatting

```cs
textLogo.FontName = "Arial";
textLogo.FontSize = 24;
textLogo.FontBold = true;
textLogo.FontItalic = false;
textLogo.Color = System.Drawing.Color.White;
textLogo.Opacity = 0.8f;
```

### Background and Borders

```cs
textLogo.BackgroundEnabled = true;
textLogo.BackgroundColor = System.Drawing.Color.Black;
textLogo.BackgroundOpacity = 0.5f;
textLogo.BorderEnabled = true;
textLogo.BorderColor = System.Drawing.Color.Yellow;
textLogo.BorderThickness = 2;
```

### Animation and Dynamic Content

For dynamic content that changes per frame:

```cs
private void SDK_OnVideoFrameBuffer(object sender, VideoFrameBufferEventArgs e)
{
    if (!logoInitiated)
    {
        logoInitiated = true;
        InitTextLogo();
    }

    // Update text based on the timestamp. e.Timestamp is a TimeSpan, so the
    // custom format needs escaped separators ("HH" is not valid for TimeSpan).
    textLogo.Text = "Timestamp: " + e.Timestamp.ToString(@"hh\:mm\:ss\.fff");

    // Animate position: oscillate up to 50 px around the starting point
    textLogo.Left = 50 + (int)(Math.Sin(e.Timestamp.TotalSeconds) * 50);

    FastImageProcessing.AddTextLogo(null, e.Frame.Data, e.Frame.Width, e.Frame.Height, ref textLogo, e.Timestamp, 0);
}
```
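The animation line above moves the text with a period of 2π (about 6.3) seconds and does not check the frame edges. A small pure function can make the period explicit and keep the text inside the frame. `OverlayAnimation.OscillatingLeft` is a hypothetical helper, and `textWidth` is assumed to be measured by you.

```cs
using System;

public static class OverlayAnimation
{
    // Horizontal position that swings around baseLeft by +/- amplitude pixels,
    // completing one cycle every periodSeconds, clamped so that a box
    // textWidth pixels wide stays inside a frame frameWidth pixels wide.
    public static int OscillatingLeft(
        TimeSpan timestamp, int baseLeft, int amplitude,
        double periodSeconds, int frameWidth, int textWidth)
    {
        if (periodSeconds <= 0)
            throw new ArgumentOutOfRangeException(nameof(periodSeconds));

        double phase = 2 * Math.PI * timestamp.TotalSeconds / periodSeconds;
        int left = baseLeft + (int)Math.Round(Math.Sin(phase) * amplitude);

        int maxLeft = Math.Max(0, frameWidth - textWidth);
        return Math.Clamp(left, 0, maxLeft);
    }
}
```

Inside the handler, `textLogo.Left = OverlayAnimation.OscillatingLeft(e.Timestamp, 50, 50, 4.0, e.Frame.Width, measuredWidth);` would replace the `Math.Sin` line, where `measuredWidth` is your own measurement.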

## Performance Considerations

When implementing custom text overlays, consider these performance best practices:

1. **Initialize objects once**: Create the VideoEffectTextLogo object only once, not per frame

2. **Minimize text changes**: Update text content only when necessary

3. **Use efficient fonts**: Simple fonts render faster than complex ones

4. **Consider resolution**: Higher resolution videos require more processing power

5. **Test on target hardware**: Ensure your implementation performs well on production systems
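One way to apply the second tip is to rebuild the overlay text only when the value it shows actually changes. The hypothetical `CachedClockText` class below formats a timestamp with one-second resolution and keeps the previous string until the second changes; you would assign `textLogo.Text` only when `TryUpdate` returns `true`. It is a sketch independent of the SDK.

```cs
using System;

public sealed class CachedClockText
{
    private long _lastSecond = long.MinValue;

    // The most recently formatted text (empty until the first update).
    public string Text { get; private set; } = string.Empty;

    // Formats the timestamp as hh:mm:ss, but only when the whole-second value
    // differs from the previous call. Returns true if Text changed.
    public bool TryUpdate(TimeSpan timestamp)
    {
        long second = (long)Math.Floor(timestamp.TotalSeconds);
        if (second == _lastSecond)
            return false;

        _lastSecond = second;
        // TimeSpan custom formats need escaped separators, e.g. hh\:mm\:ss.
        Text = TimeSpan.FromSeconds(second).ToString(@"hh\:mm\:ss");
        return true;
    }
}
```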

## Multiple Text Elements

To display multiple text elements on the same frame:

```cs
private VideoEffectTextLogo titleLogo = null;
private VideoEffectTextLogo timestampLogo = null;
private bool logosInitiated = false;

private void InitTextLogos()
{
    titleLogo = new VideoEffectTextLogo(true);
    titleLogo.Text = "Camera Feed";
    titleLogo.Left = 50;
    titleLogo.Top = 50;

    timestampLogo = new VideoEffectTextLogo(true);
    timestampLogo.Left = 50;
    timestampLogo.Top = 100;
}

private void SDK_OnVideoFrameBuffer(object sender, VideoFrameBufferEventArgs e)
{
    if (!logosInitiated)
    {
        logosInitiated = true;
        InitTextLogos();
    }

    // Update dynamic content: wall-clock date and time. e.Timestamp is a
    // TimeSpan (stream position), so it cannot be formatted as a date;
    // use e.Timestamp.ToString(@"hh\:mm\:ss\.fff") to show the position instead.
    timestampLogo.Text = DateTime.Now.ToString("yyyy-MM-dd HH:mm:ss.fff");

    // Render both text elements
    FastImageProcessing.AddTextLogo(null, e.Frame.Data, e.Frame.Width, e.Frame.Height, ref titleLogo, e.Timestamp, 0);
    FastImageProcessing.AddTextLogo(null, e.Frame.Data, e.Frame.Width, e.Frame.Height, ref timestampLogo, e.Timestamp, 0);
}
```

## Required Components

To implement this solution, you'll need:

- SDK redist package installed in your application

- Reference to the appropriate SDK (.NET Video Capture, Video Edit, or Media Player)

- Basic understanding of video frame processing concepts

## Conclusion

The OnVideoFrameBuffer event provides a powerful mechanism for implementing custom text overlays in video applications. By directly accessing the frame buffer, developers can create sophisticated text effects with precise control over appearance and behavior.

This approach is particularly valuable when standard text overlay APIs don't provide the flexibility or features required for your application. With the techniques demonstrated in this guide, you can implement professional-quality text overlays for a wide range of video processing scenarios.

---

Visit our [GitHub](https://github.com/visioforge/.Net-SDK-s-samples) page to get more code samples.

---END OF PAGE---

# Local File: .\dotnet\general\code-samples\uninstall-directshow-filter.md

---

title: Uninstalling DirectShow Filters in Windows Applications

description: Learn how to properly uninstall DirectShow filters from your system using multiple methods. This guide explains manual uninstallation techniques, troubleshooting steps, and best practices for .NET developers working with multimedia applications.

sidebar_label: Uninstall DirectShow Filters

---

# Uninstalling DirectShow Filters in Windows Applications

[!badge size="xl" target="blank" variant="info" text="Video Capture SDK .Net"](https://www.visioforge.com/video-capture-sdk-net) [!badge size="xl" target="blank" variant="info" text="Video Edit SDK .Net"](https://www.visioforge.com/video-edit-sdk-net) [!badge size="xl" target="blank" variant="info" text="Media Player SDK .Net"](https://www.visioforge.com/media-player-sdk-net)

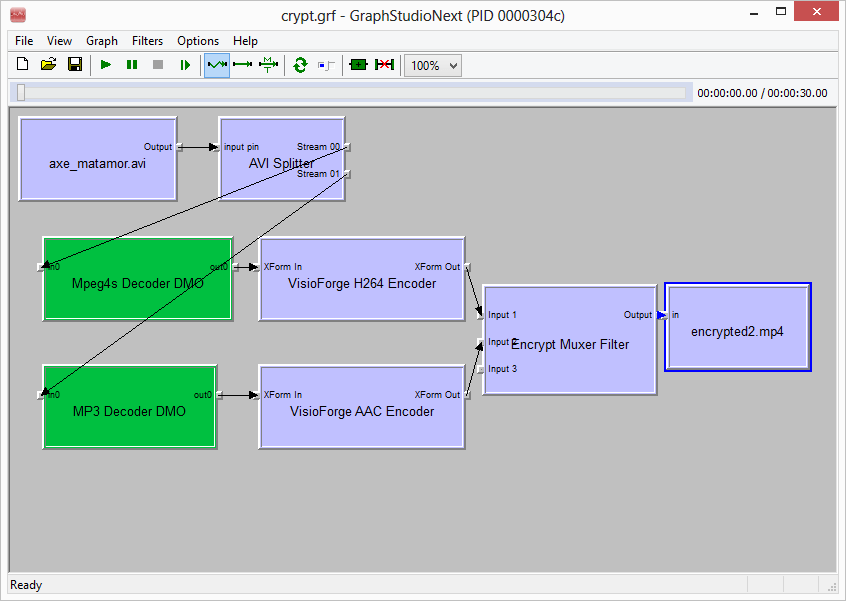

DirectShow filters are essential components for multimedia applications in Windows environments. They enable software to process audio and video data efficiently. However, there may be situations where you need to uninstall these filters, such as when upgrading your application, resolving conflicts, or completely removing a software package. This guide provides detailed instructions on how to properly uninstall DirectShow filters from your system.

## Understanding DirectShow Filters

DirectShow is a multimedia framework and API designed by Microsoft for software developers to perform various operations with media files. It's built on the Component Object Model (COM) architecture and uses a modular approach where each processing step is handled by a separate component called a filter.

Filters are categorized into three main types:

- **Source filters**: Read data from files, capture devices, or network streams

- **Transform filters**: Process or modify the data (compression, decompression, effects)

- **Renderer filters**: Display video or play audio

When SDK components are installed, they register DirectShow filters in the Windows Registry, making them available to any application that uses the DirectShow framework.

## Why Uninstall DirectShow Filters?

There are several reasons why you might need to uninstall DirectShow filters:

1. **Version conflicts**: Newer versions of the SDK might require removing older filters

2. **System cleanup**: Removing unused components to maintain system efficiency

3. **Troubleshooting**: Resolving issues with multimedia applications

4. **Complete software removal**: Ensuring no components remain after uninstalling the main application

5. **Re-registration**: Sometimes uninstalling and reinstalling filters can resolve registration issues

## Methods for Uninstalling DirectShow Filters

### Method 1: Using the SDK Installer (Recommended)

The most straightforward way to uninstall DirectShow filters is through the SDK (or redist) installer itself. SDK packages include uninstallation routines that properly remove all components, including DirectShow filters.

### Method 2: Manual Unregistration with regsvr32

If automatic uninstallation isn't possible or you need to unregister specific filters, you can use the `regsvr32` command-line tool:

1. Open Command Prompt as Administrator (right-click on Command Prompt and select "Run as administrator")

2. Use the following command syntax to unregister a filter:

```cmd
regsvr32 /u "C:\path\to\filter.dll"
```

3. Replace `C:\path\to\filter.dll` with the actual path to the DirectShow filter file

4. Press Enter to execute the command

For example, to unregister a filter located at `C:\Program Files\Common Files\FilterFolder\example_filter.dll`, you would use:

```cmd
regsvr32 /u "C:\Program Files\Common Files\FilterFolder\example_filter.dll"
```

You should see a confirmation dialog indicating successful unregistration.
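To remove several filters unattended, for example from a cleanup tool, you can drive `regsvr32` from C#. This is a minimal sketch, not part of the SDK; `FilterUnregistration` is a hypothetical helper. It adds the `/s` (silent) switch so no dialog appears and treats a non-zero exit code as failure. The calling process must run elevated on Windows.

```csharp
using System;
using System.Diagnostics;

public static class FilterUnregistration
{
    // /u unregisters the DLL; /s suppresses the message boxes.
    public static string BuildArguments(string dllPath)
    {
        if (string.IsNullOrWhiteSpace(dllPath))
            throw new ArgumentException("A DLL path is required.", nameof(dllPath));

        // Quote the path so spaces (e.g. "Program Files") survive.
        return $"/u /s \"{dllPath}\"";
    }

    // Runs regsvr32 and returns its exit code (0 means success).
    public static int Unregister(string regsvr32Path, string dllPath)
    {
        var startInfo = new ProcessStartInfo(regsvr32Path, BuildArguments(dllPath))
        {
            UseShellExecute = false,
            CreateNoWindow = true,
        };

        using var process = Process.Start(startInfo)
            ?? throw new InvalidOperationException("regsvr32 could not be started.");
        process.WaitForExit();
        return process.ExitCode;
    }
}
```

For example, `FilterUnregistration.Unregister(@"C:\Windows\System32\regsvr32.exe", @"C:\Program Files\Common Files\FilterFolder\example_filter.dll")` would unregister the example filter above.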

## Finding DirectShow Filter Locations

Before you can manually unregister filters, you need to know their locations. Here are several methods to find installed DirectShow filters:

### Using GraphStudio

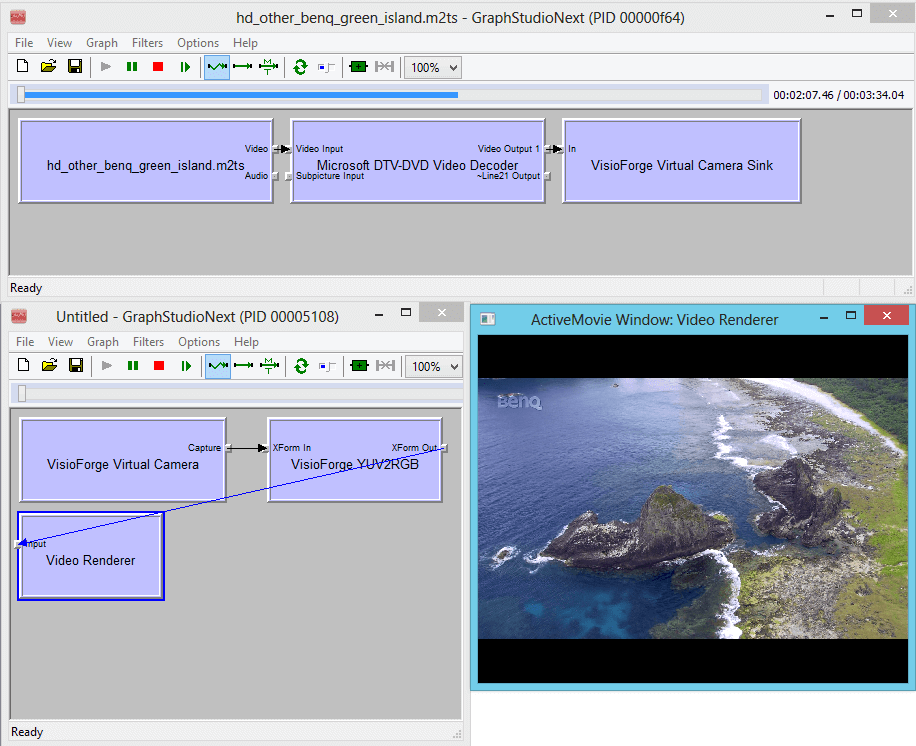

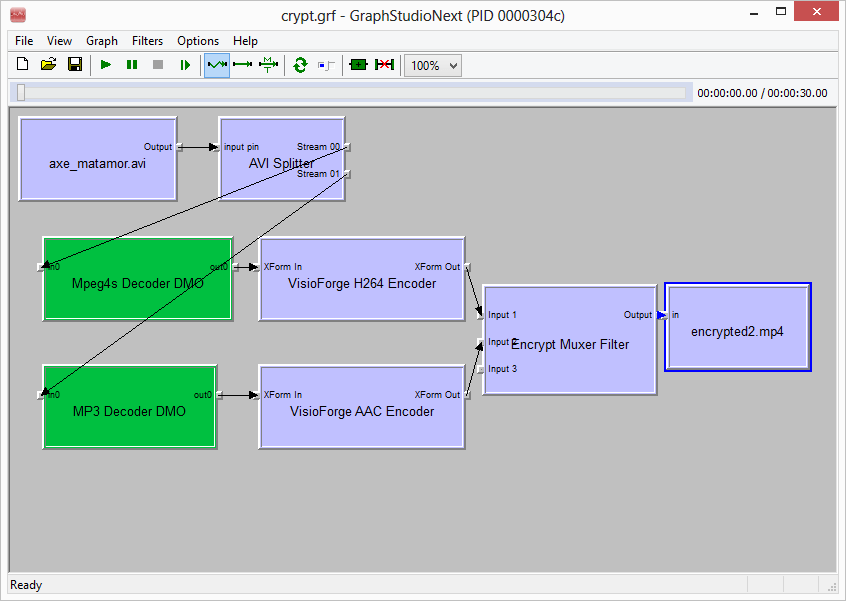

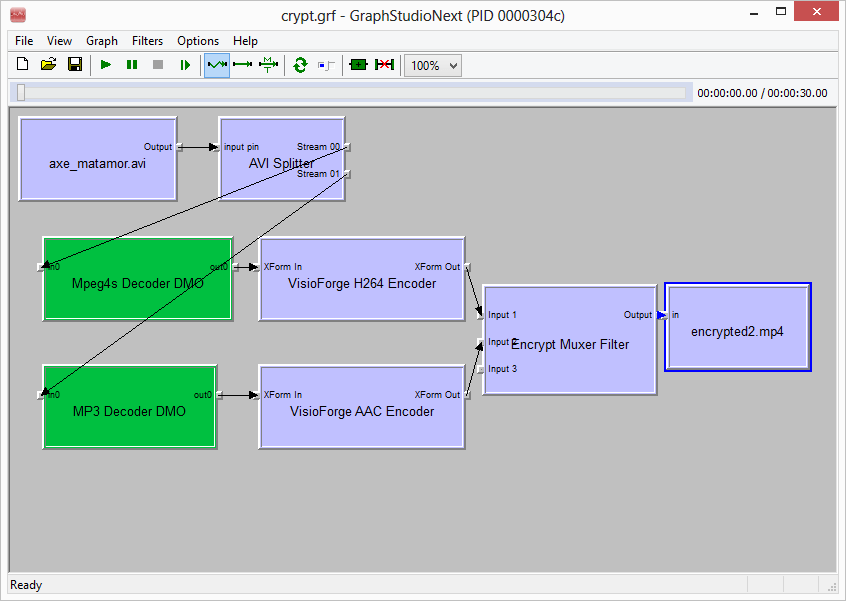

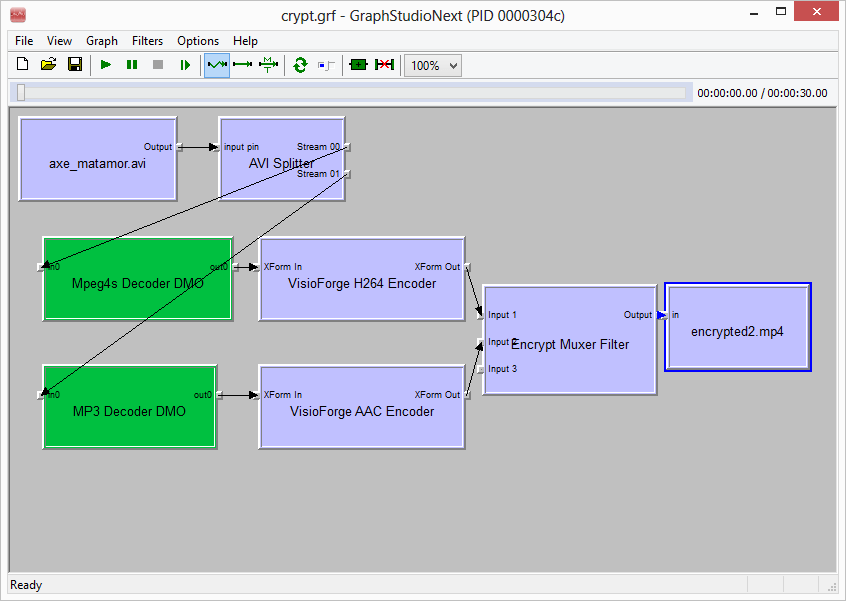

[GraphStudio](https://github.com/cplussharp/graph-studio-next) is a powerful open-source tool for working with DirectShow filters. To find filter locations:

1. Download and install GraphStudio

2. Launch the application with administrator privileges

3. Go to "Graph > Insert Filters"

4. Browse through the list of installed filters

5. Right-click on a filter and select "Properties"

6. Note the "File:" path shown in the properties dialog

This method provides the exact file path needed for manual unregistration.

### Using System Registry

You can also find DirectShow filters through the Windows Registry:

1. Press `Win + R` to open the Run dialog

2. Type `regedit` and press Enter to open Registry Editor

3. Navigate to `HKEY_CLASSES_ROOT\CLSID`

4. Use the Search function (Ctrl+F) to find filter names

5. Look for the `InprocServer32` subkey under the filter's CLSID; its `(Default)` value contains the path to the filter file
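The same lookup can be scripted. This sketch assumes the filters were registered through the DirectShow filter mapper, which lists them under the legacy filter category `{083863F1-70DE-11d0-BD40-00A0C911CE86}`; each entry carries `FriendlyName` and `CLSID` values. `DirectShowRegistry` is a hypothetical helper. Reading `RegistryView.Registry64` and `RegistryView.Registry32` separately covers both 64-bit and 32-bit registrations.

```csharp
using System;
using System.Collections.Generic;
using Microsoft.Win32;

public static class DirectShowRegistry
{
    // CLSID_LegacyAmFilterCategory: where the filter mapper lists DirectShow filters.
    private const string FilterCategory = @"CLSID\{083863F1-70DE-11d0-BD40-00A0C911CE86}\Instance";

    // Key whose default value holds a COM server's DLL path.
    public static string InprocServerKeyPath(string clsid) => $@"CLSID\{clsid}\InprocServer32";

    // Lists (friendly name, CLSID, DLL path) for each filter registered in the given view.
    public static List<(string Name, string Clsid, string DllPath)> ListFilters(RegistryView view)
    {
        var result = new List<(string Name, string Clsid, string DllPath)>();
        if (!OperatingSystem.IsWindows())
            return result; // The registry only exists on Windows.

        using var root = RegistryKey.OpenBaseKey(RegistryHive.ClassesRoot, view);
        using var category = root.OpenSubKey(FilterCategory);
        if (category == null)
            return result;

        foreach (string subKeyName in category.GetSubKeyNames())
        {
            using var filterKey = category.OpenSubKey(subKeyName);
            string name = filterKey?.GetValue("FriendlyName") as string ?? subKeyName;
            string clsid = filterKey?.GetValue("CLSID") as string ?? subKeyName;

            using var serverKey = root.OpenSubKey(InprocServerKeyPath(clsid));
            // The unnamed (default) value contains the path to the filter DLL.
            string dllPath = serverKey?.GetValue(null) as string;

            result.Add((name, clsid, dllPath));
        }
        return result;
    }
}
```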

## Platform Considerations (x86 vs x64)

DirectShow filters are platform-specific, meaning 32-bit (x86) and 64-bit (x64) versions are separate components. If you've installed both versions, you need to unregister each one separately.

For x64 systems:

- 64-bit filters are typically installed in `C:\Windows\System32`

- 32-bit filters are typically installed in `C:\Windows\SysWOW64`

Use the appropriate version of `regsvr32` for each platform:

- For 64-bit filters: `C:\Windows\System32\regsvr32.exe`

- For 32-bit filters: `C:\Windows\SysWOW64\regsvr32.exe`
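If you are not sure whether a filter DLL is 32-bit or 64-bit, you can read the `Machine` field of its PE header (`0x014C` for x86, `0x8664` for x64) and pick the matching `regsvr32`. `FilterBitness` is a hypothetical helper; the path selection assumes a 64-bit Windows installation, where `SysWOW64` exists.

```csharp
using System;
using System.IO;

public static class FilterBitness
{
    // Reads the Machine field of a DLL's PE header.
    // Returns 32 for x86 (0x014C), 64 for x64 (0x8664), or 0 if unknown.
    public static int GetBitness(Stream dll)
    {
        using var reader = new BinaryReader(dll, System.Text.Encoding.ASCII, leaveOpen: true);

        dll.Seek(0x3C, SeekOrigin.Begin);       // e_lfanew: offset of the PE header
        int peOffset = reader.ReadInt32();

        dll.Seek(peOffset, SeekOrigin.Begin);
        if (reader.ReadUInt32() != 0x00004550)  // "PE\0\0" signature
            return 0;

        ushort machine = reader.ReadUInt16();
        return machine switch
        {
            0x014C => 32,
            0x8664 => 64,
            _ => 0,
        };
    }

    // On 64-bit Windows, System32 holds the 64-bit regsvr32 and SysWOW64 the 32-bit one.
    public static string GetRegsvr32Path(string windowsDir, int dllBitness) =>
        dllBitness == 32
            ? Path.Combine(windowsDir, "SysWOW64", "regsvr32.exe")
            : Path.Combine(windowsDir, "System32", "regsvr32.exe");
}
```

Typical use: open the DLL with `File.OpenRead(path)`, call `GetBitness`, and pass the result together with `Environment.GetFolderPath(Environment.SpecialFolder.Windows)` to `GetRegsvr32Path`.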

## Troubleshooting Filter Uninstallation

If you encounter issues during filter uninstallation, try these troubleshooting steps:

### Unable to Unregister Filter

If you receive an error like "DllUnregisterServer failed with error code 0x80004005":

1. Ensure you're running Command Prompt as Administrator

2. Verify that the path to the filter is correct

3. Check if the filter file exists and isn't in use by any application

4. Close any applications that might be using DirectShow filters

5. In some cases, a system restart may be necessary before unregistration

### Filter Still Present After Unregistration

If a filter appears to be still registered after attempting to unregister it:

1. Use GraphStudio to check if the filter is still listed

2. Look for multiple instances of the filter in different locations

3. Check both 32-bit and 64-bit registry locations

4. Try using the Microsoft-provided tool "OleView" to inspect COM registrations

## Verifying Successful Uninstallation

After uninstalling DirectShow filters, verify the removal was successful:

1. Use GraphStudio to check if the filters no longer appear in the available filters list

2. Check the registry for any remaining entries related to the filters

3. Test any applications that previously used the filters to ensure they handle the absence gracefully

---

Visit our [GitHub](https://github.com/visioforge/.Net-SDK-s-samples) page to get more code samples and implementation examples for working with DirectShow and multimedia applications in .NET.

---END OF PAGE---

# Local File: .\dotnet\general\code-samples\video-view-set-custom-image.md

---

title: Setting Custom Images for VideoView in .NET SDKs

description: Learn how to implement custom image display in VideoView controls when no video is playing. This detailed guide includes code examples, troubleshooting tips, and best practices for .NET developers working with video display components.

sidebar_label: Setting Custom Image for VideoView

---

# Setting Custom Images for VideoView Controls in .NET Applications

[!badge size="xl" target="blank" variant="info" text="Video Capture SDK .Net"](https://www.visioforge.com/video-capture-sdk-net) [!badge size="xl" target="blank" variant="info" text="Video Edit SDK .Net"](https://www.visioforge.com/video-edit-sdk-net) [!badge size="xl" target="blank" variant="info" text="Media Player SDK .Net"](https://www.visioforge.com/media-player-sdk-net)

## Introduction

When developing media applications in .NET, it's often necessary to display a custom image within your VideoView control when no video content is playing. This capability is essential for creating professional-looking applications that maintain visual appeal during inactive states. Custom images can serve as placeholders, branding opportunities, or informational displays to enhance the user experience.

This guide explores the implementation of custom image functionality for VideoView controls across various .NET SDK applications.

## Understanding VideoView Custom Images

The VideoView control is a versatile component that displays video content in your application. However, when the control is not actively playing video, it typically shows a blank or default display. By implementing custom images, you can:

- Display your application or company logo

- Show preview thumbnails of available content

- Present instructional information to users

- Maintain visual consistency across your application

- Indicate the video's status (paused, stopped, loading, etc.)

It's important to note that the custom image is only visible when the control is not playing any video content. Once playback begins, the video stream automatically replaces the custom image.

## Implementation Process

The process of setting a custom image for a VideoView control involves three primary operations:

1. Creating a picture box with appropriate dimensions

2. Setting the desired image

3. Cleaning up resources when no longer needed

Let's explore each of these steps in detail.

## Step 1: Creating the Picture Box

The first step is to initialize a picture box within your VideoView control with the appropriate dimensions. This operation should be performed once during the setup phase:

```csharp
VideoView1.PictureBoxCreate(VideoView1.Width, VideoView1.Height);
```

This method call creates an internal picture box component that will host your custom image. The parameters specify the width and height of the picture box, which should typically match the dimensions of your VideoView control to ensure proper display without stretching or distortion.

### Best Practices for Picture Box Creation

- **Timing Considerations**: Create the picture box during form initialization or after the control has been sized appropriately

- **Dynamic Sizing**: If your application supports resizing, consider recreating the picture box when the control size changes

- **Error Handling**: Implement try-catch blocks to handle potential exceptions during creation

## Step 2: Setting the Custom Image

After creating the picture box, set your custom image with the `PictureBoxSetImage` method:

```csharp
// Load an image from a file
Image customImage = Image.FromFile("path/to/your/image.jpg");
VideoView1.PictureBoxSetImage(customImage);
```

Alternatively, you can use built-in resources or dynamically generated images:

```csharp
// Using a resource image
VideoView1.PictureBoxSetImage(Properties.Resources.MyCustomImage);

// Or creating a dynamic image
using (Bitmap dynamicImage = new Bitmap(VideoView1.Width, VideoView1.Height))
{
    using (Graphics g = Graphics.FromImage(dynamicImage))
    using (Font font = new Font("Arial", 24))
    {
        // Draw on the image
        g.Clear(Color.DarkBlue);
        g.DrawString("Ready to Play", font, Brushes.White, new PointF(50, 50));
    }

    // Pass a copy, because dynamicImage is disposed when this block ends
    VideoView1.PictureBoxSetImage(dynamicImage.Clone() as Image);
}
```

### Image Format Considerations

The image format you choose can impact performance and visual quality:

- **PNG**: Best for images with transparency

- **JPEG**: Suitable for photographic content

- **BMP**: Uncompressed format with higher memory usage

- **GIF**: Supports simple animations but with limited color depth

### Image Size Optimization

For optimal performance, consider these factors when preparing your custom images:

1. **Match Dimensions**: Resize your image to match the VideoView dimensions to avoid scaling operations

2. **Resolution Awareness**: Consider display DPI for crisp images on high-resolution displays

3. **Memory Consumption**: Large images consume more memory, which may impact application performance
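The arithmetic behind these points is simple. To fit an image without distortion, scale it by the smaller of the width and height ratios. For memory, what matters is the decoded bitmap rather than the file size: at 4 bytes per pixel, a 1920x1080 image takes 1920 × 1080 × 4 = 8,294,400 bytes (about 7.9 MiB), whether it was loaded from a JPEG or a PNG. The hypothetical `PlaceholderSizing` helper below computes both and is independent of the SDK; you could pass its result to `Graphics.DrawImage` when preparing a resized bitmap.

```csharp
using System;

public static class PlaceholderSizing
{
    // Scales an image to fit inside a view while keeping its aspect ratio
    // (letterboxing). Returns the centering offset and the scaled size.
    public static (int X, int Y, int Width, int Height) FitInside(
        int imageWidth, int imageHeight, int viewWidth, int viewHeight)
    {
        if (imageWidth <= 0 || imageHeight <= 0 || viewWidth <= 0 || viewHeight <= 0)
            return (0, 0, 0, 0);

        double scale = Math.Min((double)viewWidth / imageWidth, (double)viewHeight / imageHeight);
        int width = (int)Math.Round(imageWidth * scale);
        int height = (int)Math.Round(imageHeight * scale);

        return ((viewWidth - width) / 2, (viewHeight - height) / 2, width, height);
    }

    // Approximate memory used by an uncompressed bitmap (4 bytes per pixel by default).
    public static long EstimateBytes(int width, int height, int bytesPerPixel = 4) =>
        (long)width * height * bytesPerPixel;
}
```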

## Step 3: Cleaning Up Resources

When the custom image is no longer required, it's important to clean up the resources to prevent memory leaks:

```csharp
VideoView1.PictureBoxDestroy();
```

This method should be called when:

- The application is closing

- The control is being disposed

- You're switching to video playback mode and won't need the custom image anymore

### Resource Management Best Practices

Proper resource management is crucial for maintaining application stability:

- **Explicit Cleanup**: Always call `PictureBoxDestroy()` when you're done with the custom image

- **Disposal Timing**: Include the cleanup call in your form's `Dispose` method or `FormClosing` event handler

- **State Tracking**: Keep track of whether a picture box has been created to avoid destroying a non-existent resource
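State tracking can be wrapped in a small class so that create and destroy are each called at most once per cycle. The sketch below is hypothetical and SDK-independent: it takes the create and destroy operations as delegates, which in a real form would wrap `VideoView1.PictureBoxCreate(width, height)` and `VideoView1.PictureBoxDestroy()`.

```csharp
using System;

// Hypothetical wrapper that tracks whether the placeholder exists.
public sealed class PlaceholderLifecycle : IDisposable
{
    private readonly Action<int, int> _create;
    private readonly Action _destroy;

    public bool IsCreated { get; private set; }

    public PlaceholderLifecycle(Action<int, int> create, Action destroy)
    {
        _create = create ?? throw new ArgumentNullException(nameof(create));
        _destroy = destroy ?? throw new ArgumentNullException(nameof(destroy));
    }

    // Creates the placeholder, destroying any previous one first.
    public void Create(int width, int height)
    {
        Destroy();
        _create(width, height);
        IsCreated = true;
    }

    // Destroys the placeholder only if it currently exists.
    public void Destroy()
    {
        if (!IsCreated)
            return;
        _destroy();
        IsCreated = false;
    }

    public void Dispose() => Destroy();
}
```

For example, `new PlaceholderLifecycle((w, h) => VideoView1.PictureBoxCreate(w, h), () => VideoView1.PictureBoxDestroy())` could be created in the form constructor and disposed in `FormClosing`.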

## Advanced Scenarios

### Dynamic Image Updates

In some applications, you may need to update the custom image dynamically:

```csharp
// Tracks whether PictureBoxCreate has been called
private bool isPictureBoxCreated = false;

private void UpdateCustomImage(string imagePath)
{
    // Create the picture box first if it doesn't exist yet
    if (!isPictureBoxCreated)
    {
        VideoView1.PictureBoxCreate(VideoView1.Width, VideoView1.Height);
        isPictureBoxCreated = true;
    }

    // Update image
    Image newImage = Image.FromFile(imagePath);
    VideoView1.PictureBoxSetImage(newImage);
}
```

### Handling Control Resizing

If your application allows resizing of the VideoView control, you'll need to handle image scaling:

```csharp
private void VideoView1_SizeChanged(object sender, EventArgs e)
{
    // Recreate picture box with new dimensions
    // (isPictureBoxCreated is a bool field set after PictureBoxCreate)
    if (isPictureBoxCreated)
    {
        VideoView1.PictureBoxDestroy();
    }

    VideoView1.PictureBoxCreate(VideoView1.Width, VideoView1.Height);
    isPictureBoxCreated = true;

    // Set image again with appropriate scaling
    // (SetScaledCustomImage is your own helper, not an SDK method)
    SetScaledCustomImage();
}
```
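`SizeChanged` can fire many times during a drag-resize, and a docked control can report a zero size when the form is minimized. A guard like the hypothetical `ResizeGuard` below skips sizes that are unusable or unchanged. You would call `ShouldRebuild(VideoView1.Width, VideoView1.Height)` at the top of the handler and return early when it is `false`.

```csharp
// Hypothetical helper: decides whether a SizeChanged event should rebuild
// the placeholder. Skips zero or negative sizes and repeats of the last size.
public sealed class ResizeGuard
{
    private int _lastWidth = -1;
    private int _lastHeight = -1;

    public bool ShouldRebuild(int width, int height)
    {
        if (width <= 0 || height <= 0)
            return false;

        if (width == _lastWidth && height == _lastHeight)
            return false;

        _lastWidth = width;
        _lastHeight = height;
        return true;
    }
}
```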

### Multiple VideoView Controls

When working with multiple VideoView controls, ensure proper management for each:

```csharp
private void InitializeAllVideoViews()
{
    // Initialize each VideoView with appropriate custom images
    VideoView1.PictureBoxCreate(VideoView1.Width, VideoView1.Height);
    VideoView1.PictureBoxSetImage(Properties.Resources.Camera1Placeholder);

    VideoView2.PictureBoxCreate(VideoView2.Width, VideoView2.Height);
    VideoView2.PictureBoxSetImage(Properties.Resources.Camera2Placeholder);

    // Additional VideoView controls...
}
```

## Troubleshooting Common Issues

### Image Not Displaying

If your custom image isn't appearing:

1. **Check Timing**: Ensure you're setting the image after the picture box is created

2. **Verify Video State**: Confirm the control isn't currently playing video

3. **Image Loading**: Verify the image path is correct and accessible

4. **Control Visibility**: Ensure the VideoView control is visible in the UI

### Memory Leaks

To prevent memory leaks:

1. **Dispose Images**: Always dispose Image objects after they're no longer needed

2. **Destroy Picture Box**: Call `PictureBoxDestroy()` when appropriate

3. **Resource Tracking**: Implement proper tracking of created resources

## Complete Implementation Example

Here's a complete implementation example that demonstrates the proper lifecycle management:

```csharp
public partial class VideoPlayerForm : Form
{
    private bool isPictureBoxCreated = false;

    // Keep the placeholder image alive while the control may still draw it
    private Image placeholderImage;

    public VideoPlayerForm()
    {
        InitializeComponent();
        this.Load += VideoPlayerForm_Load;
        this.FormClosing += VideoPlayerForm_FormClosing;
    }

    private void VideoPlayerForm_Load(object sender, EventArgs e)
    {
        InitializeCustomImage();
    }

    private void InitializeCustomImage()
    {
        try
        {
            VideoView1.PictureBoxCreate(VideoView1.Width, VideoView1.Height);
            isPictureBoxCreated = true;

            placeholderImage = Properties.Resources.VideoPlaceholder;
            VideoView1.PictureBoxSetImage(placeholderImage);
        }
        catch (Exception ex)
        {
            // Handle exceptions
            MessageBox.Show($"Error setting custom image: {ex.Message}");
        }
    }

    private void btnPlay_Click(object sender, EventArgs e)
    {
        // Play video logic here
        // The custom image will automatically be replaced during playback
    }

    private void VideoPlayerForm_FormClosing(object sender, FormClosingEventArgs e)
    {
        CleanupResources();
    }

    private void CleanupResources()
    {
        if (isPictureBoxCreated)
        {
            VideoView1.PictureBoxDestroy();
            isPictureBoxCreated = false;
        }

        // Dispose the image only after the picture box no longer uses it
        placeholderImage?.Dispose();
        placeholderImage = null;
    }
}
```

## Conclusion

Implementing custom images for VideoView controls enhances the user experience and professional appearance of your .NET media applications. By following the steps outlined in this guide, you can effectively display branded or informative content when videos aren't playing.

Remember the key points:

1. Create the picture box with the appropriate dimensions

2. Set your custom image with proper resource management

3. Clean up resources when they're no longer needed

4. Handle resizing and other special scenarios as required

With these techniques, you can create more polished and user-friendly video applications in .NET.

---

Visit our [GitHub](https://github.com/visioforge/.Net-SDK-s-samples) page to get more code samples and implementation examples.

---END OF PAGE---

# Local File: .\dotnet\general\code-samples\vu-meters.md

---

title: Implementing Audio VU Meters & Waveform Visualizers

description: Complete guide to implementing VU meters and waveform visualizers in .NET applications. Learn how to display real-time audio levels with WinForms and WPF, including code examples for mono and stereo channel visualization.

sidebar_label: VU Meter and Waveform Painter

---

# Audio Visualization: Implementing VU Meters and Waveform Displays in .NET

[!badge size="xl" target="blank" variant="info" text="Video Capture SDK .Net"](https://www.visioforge.com/video-capture-sdk-net) [!badge size="xl" target="blank" variant="info" text="Video Edit SDK .Net"](https://www.visioforge.com/video-edit-sdk-net) [!badge size="xl" target="blank" variant="info" text="Media Player SDK .Net"](https://www.visioforge.com/media-player-sdk-net)

Audio visualization is a crucial component of modern media applications, providing users with visual feedback about audio levels and waveform patterns. This guide demonstrates how to implement VU (Volume Unit) meters and waveform visualizers in both WinForms and WPF applications.

## Understanding Audio Visualization Components

Before diving into implementation, it's important to understand the two main visualization tools we'll be working with:

### VU Meters

VU meters display the current audio level of a signal, showing how loud the audio is at any given moment. They provide real-time feedback about audio levels, helping users monitor signal strength and prevent distortion or clipping.
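The event handlers in this guide receive levels in decibels (`e.ChannelLevelsDb`). For reference, the usual relationship between a linear sample amplitude (1.0 = full scale) and dBFS is 20·log10(amplitude), so halving the amplitude lowers the level by about 6 dB. If you draw your own meter instead of using the SDK control, you also need to map that logarithmic value to a bar length. The hypothetical `LevelScale` helper below does both, using an assumed -60 dB floor.

```cs
using System;

public static class LevelScale
{
    // Linear amplitude -> dBFS: 20 * log10(amplitude). Silence maps to the floor.
    public static double AmplitudeToDb(double amplitude, double floorDb = -60.0) =>
        amplitude <= 0 ? floorDb : Math.Max(floorDb, 20.0 * Math.Log10(amplitude));

    // Maps a dB level onto a 0..1 fill fraction for a meter bar,
    // with floorDb at the bottom and 0 dB at the top.
    public static double DbToFraction(double db, double floorDb = -60.0)
    {
        if (db <= floorDb) return 0.0;
        if (db >= 0.0) return 1.0;
        return (db - floorDb) / -floorDb;
    }
}
```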

### Waveform Painters

Waveform visualizers display the audio signal as a continuous line that represents amplitude changes over time. They provide a more detailed representation of the audio content, showing patterns and characteristics that might not be apparent from listening alone.

## Implementation in WinForms Applications

WinForms provides a straightforward way to implement audio visualization components with minimal code. Let's explore the implementation of both VU meters and waveform painters.

### WinForms VU Meter Implementation

Implementing a VU meter in WinForms requires just a few steps:

1. **Add the VU Meter Control**: First, add the VU meter control to your form. For stereo audio, you'll typically add two controls—one for each channel.

```cs
// Add this to your form design
VisioForge.Core.UI.WinForms.VolumeMeterPro.VolumeMeter volumeMeter1;
VisioForge.Core.UI.WinForms.VolumeMeterPro.VolumeMeter volumeMeter2; // For stereo
```

2. **Enable VU Meter in Your Media Control**: Before starting playback or capture, enable the VU meter functionality in your media control.

```cs
// Enable VU meter before starting playback/capture
mediaPlayer.Audio_VUMeter_Pro_Enabled = true;
```

3. **Implement the Event Handler**: Add an event handler to process the audio level data and update the VU meter display.

```cs
private void VideoCapture1_OnAudioVUMeterProVolume(object sender, AudioLevelEventArgs e)
{
    volumeMeter1.Amplitude = e.ChannelLevelsDb[0];

    if (e.ChannelLevelsDb.Length > 1)
    {
        volumeMeter2.Amplitude = e.ChannelLevelsDb[1];
    }
}
```

With these steps, your VU meter will dynamically update based on the audio levels of your media playback or capture.

### WinForms Waveform Painter Implementation

The waveform painter implementation follows a similar pattern:

1. **Add the Waveform Painter Control**: Add the waveform painter control to your form. For stereo audio, add two controls.

```cs
// Add this to your form design
VisioForge.Core.UI.WinForms.VolumeMeterPro.WaveformPainter waveformPainter1;
VisioForge.Core.UI.WinForms.VolumeMeterPro.WaveformPainter waveformPainter2; // For stereo
```

2. **Enable VU Meter Processing**: Enable the VU meter functionality to provide data for the waveform painter.

```cs
// Enable VU meter before starting playback/capture
mediaPlayer.Audio_VUMeter_Pro_Enabled = true;
```

3. **Implement the Event Handler**: Add an event handler to process the audio data and update the waveform display.

```cs
private void VideoCapture1_OnAudioVUMeterProVolume(object sender, AudioLevelEventArgs e)
{
    waveformPainter1.AddMax(e.ChannelLevelsDb[0]);

    if (e.ChannelLevelsDb.Length > 1)
    {
        waveformPainter2.AddMax(e.ChannelLevelsDb[1]);
    }
}
```

## Implementation in WPF Applications

WPF requires a slightly different approach due to its threading model and UI framework. Let's look at how to implement both visualization types in WPF.

### WPF VU Meter Implementation

1. **Add the VU Meter Control**: Add the VU meter control to your XAML layout. For stereo audio, add two controls.

```xml

```

2. **Enable VU Meter Processing and Start the Meters**:

```cs
VideoCapture1.Audio_VUMeter_Pro_Enabled = true;
volumeMeter1.Start();
volumeMeter2.Start();
```

3. **Implement the Event Handler with Dispatcher**: In WPF, you need to use the Dispatcher to update UI elements from non-UI threads.

```cs
private delegate void AudioVUMeterProVolumeDelegate(AudioLevelEventArgs e);

private void AudioVUMeterProVolumeDelegateMethod(AudioLevelEventArgs e)
{
    volumeMeter1.Amplitude = e.ChannelLevelsDb[0];
    volumeMeter1.Update();

    if (e.ChannelLevelsDb.Length > 1)
    {
        volumeMeter2.Amplitude = e.ChannelLevelsDb[1];
        volumeMeter2.Update();
    }
}

private void VideoCapture1_OnAudioVUMeterProVolume(object sender, AudioLevelEventArgs e)
{
    Dispatcher.BeginInvoke(new AudioVUMeterProVolumeDelegate(AudioVUMeterProVolumeDelegateMethod), e);
}
```

4. **Clean Up After Playback**: When playback stops, clean up the VU meters to release resources.

```cs
volumeMeter1.Stop();
volumeMeter1.Clear();
volumeMeter2.Stop();
volumeMeter2.Clear();
```
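The handler in step 3 queues one `Dispatcher.BeginInvoke` call per audio event. If the UI thread falls behind, those calls pile up and the meters lag. A common alternative is to keep only the newest values and allow a single pending UI update at a time. The hypothetical `LatestLevels` class below sketches that coalescing pattern without depending on WPF.

```cs
using System;
using System.Threading;

// Keeps only the newest level values and allows at most one queued UI update.
public sealed class LatestLevels
{
    private double[] _latest = Array.Empty<double>();
    private int _pending; // 0 = no UI update queued, 1 = update queued

    // Audio thread: stores the values. Returns true if the caller should queue
    // a UI update now; false if one is already queued and will see these values.
    public bool Publish(double[] levels)
    {
        Volatile.Write(ref _latest, (double[])levels.Clone());
        return Interlocked.Exchange(ref _pending, 1) == 0;
    }

    // UI thread, inside the queued callback: clears the pending flag first,
    // then returns the newest values, so no update is ever lost.
    public double[] Take()
    {
        Interlocked.Exchange(ref _pending, 0);
        return Volatile.Read(ref _latest);
    }
}
```

In `VideoCapture1_OnAudioVUMeterProVolume` you would call `Publish(e.ChannelLevelsDb)` and, only when it returns `true`, call `Dispatcher.BeginInvoke` with a callback that starts with `Take()` and then updates the meters.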

### WPF Waveform Painter Implementation

1. **Add the Waveform Painter Control**: Add the waveform painter control to your XAML layout.

```xml

```

2. **Enable VU Meter Processing and Start the Waveform Painter**:

```cs
VideoCapture1.Audio_VUMeter_Pro_Enabled = true;
waveformPainter.Start();
```

3. **Implement the Maximum Calculated Event Handler**: For waveform painters in WPF, handle the `OnAudioVUMeterProMaximumCalculated` event instead, which reports the maximum and minimum sample values.

```cs
private delegate void AudioVUMeterProMaximumCalculatedDelegate(VUMeterMaxSampleEventArgs e);

private void AudioVUMeterProMaximumCalculatedDelegateMethod(VUMeterMaxSampleEventArgs e)
{
    waveformPainter.AddValue(e.MaxSample, e.MinSample);
}

private void VideoCapture1_OnAudioVUMeterProMaximumCalculated(object sender, VUMeterMaxSampleEventArgs e)
{
    Dispatcher.BeginInvoke(new AudioVUMeterProMaximumCalculatedDelegate(AudioVUMeterProMaximumCalculatedDelegateMethod), e);
}
```

4. **Clean Up After Playback**: When playback stops, clean up the waveform painter.

```cs
waveformPainter.Stop();
waveformPainter.Clear();
```

## Advanced Customization Options

Both the VU meter and waveform painter controls offer extensive customization options to match your application's design and user experience requirements.

### Customizing VU Meters

You can customize various aspects of the VU meter appearance:

- **Color Scheme**: Modify the colors used for different audio levels (low, medium, high)

- **Response Time**: Adjust how quickly the meter responds to level changes

- **Scale**: Configure the decibel scale and range

- **Orientation**: Set horizontal or vertical orientation

Example of customizing a VU meter:

```cs
volumeMeter1.PeakHoldTime = 500; // Hold peak for 500ms
volumeMeter1.ColorNormal = Color.Green;
volumeMeter1.ColorWarning = Color.Yellow;
volumeMeter1.ColorAlert = Color.Red;
volumeMeter1.WarningThreshold = -12; // dB
volumeMeter1.AlertThreshold = -6; // dB
```
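The behavior behind a peak-hold time and warning/alert thresholds can be sketched in a few lines. The hypothetical `PeakHold` class below is not the control's implementation. It assumes levels in dB and timestamps in milliseconds, and shows one common peak-hold behavior (hold the highest level, then let it fall linearly in dB), plus a threshold classification matching the -12 dB / -6 dB values above.

```cs
using System;

public enum LevelZone { Normal, Warning, Alert }

public sealed class PeakHold
{
    private readonly double _holdMs;
    private readonly double _decayDbPerSecond;
    private readonly double _floorDb;
    private double _peakDb;
    private double _peakTimeMs;
    private double _lastTimeMs;

    public PeakHold(double holdMs, double decayDbPerSecond, double floorDb = -60.0)
    {
        _holdMs = holdMs;
        _decayDbPerSecond = decayDbPerSecond;
        _floorDb = floorDb;
        _peakDb = floorDb;
    }

    // Feeds a level (dB) observed at timeMs; returns the current peak marker.
    public double Update(double levelDb, double timeMs)
    {
        if (levelDb >= _peakDb)
        {
            _peakDb = levelDb;
            _peakTimeMs = timeMs;
        }
        else if (timeMs - _peakTimeMs > _holdMs)
        {
            // Hold time expired: let the marker fall, but not below the current level.
            double elapsedSeconds = (timeMs - Math.Max(_lastTimeMs, _peakTimeMs + _holdMs)) / 1000.0;
            _peakDb = Math.Max(Math.Max(levelDb, _floorDb), _peakDb - _decayDbPerSecond * elapsedSeconds);
        }
        _lastTimeMs = timeMs;
        return _peakDb;
    }

    // Classifies a level against warning/alert thresholds (e.g. to choose a color).
    public static LevelZone Classify(double levelDb, double warningDb, double alertDb) =>
        levelDb >= alertDb ? LevelZone.Alert : levelDb >= warningDb ? LevelZone.Warning : LevelZone.Normal;
}
```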

### Customizing Waveform Painters

Waveform painters can be customized to provide different visual representations:

- **Line Thickness**: Adjust the thickness of the waveform line

- **Color Gradient**: Apply color gradients based on amplitude

- **Time Scale**: Modify how much time is represented in the visible area

- **Rendering Mode**: Choose between different rendering styles (line, filled, etc.)

Example of customizing a waveform painter:

```cs
waveformPainter.LineColor = Color.SkyBlue;
waveformPainter.BackColor = Color.Black;
waveformPainter.LineThickness = 2;
waveformPainter.ScrollingSpeed = 50;
waveformPainter.RenderMode = WaveformRenderMode.FilledLine;
```
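The **Time Scale** option comes down to how many audio samples are collapsed into each horizontal pixel. A common approach, shown here as a sketch with a hypothetical `WaveformMath` helper (the SDK's painter may use a different method), keeps the minimum and maximum of each slice so that short peaks remain visible:

```cs
using System;

// Hypothetical helper: reduce a block of samples to one (min, max) pair per
// pixel column. The SDK's waveform painter may use a different method.
public static class WaveformMath
{
    public static (float Min, float Max)[] MinMaxPerColumn(float[] samples, int columns)
    {
        if (samples == null) throw new ArgumentNullException(nameof(samples));
        if (columns <= 0) throw new ArgumentOutOfRangeException(nameof(columns));

        var result = new (float Min, float Max)[columns];
        if (samples.Length == 0) return result;

        for (int c = 0; c < columns; c++)
        {
            // Each column covers a contiguous slice; long math avoids overflow.
            int start = (int)((long)c * samples.Length / columns);
            int end = (int)((long)(c + 1) * samples.Length / columns);

            // With more columns than samples, give every column at least one sample.
            if (end <= start) end = start + 1;

            float min = samples[start];
            float max = samples[start];
            for (int i = start + 1; i < end; i++)
            {
                if (samples[i] < min) min = samples[i];
                if (samples[i] > max) max = samples[i];
            }

            result[c] = (min, max);
        }

        return result;
    }
}
```

Showing more time in the same width simply means more samples per column; each column still draws one vertical line from `Min` to `Max`.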

## Performance Considerations

When implementing audio visualization, consider these performance tips:

1. **Update Frequency**: Balance visual responsiveness with CPU usage by adjusting how frequently you update the visuals

2. **UI Thread Management**: Always update UI elements on the appropriate thread (especially important in WPF)

3. **Resource Cleanup**: Properly stop and clear visualization controls when not in use

4. **Buffering**: Consider implementing buffering for smoother visualization during high CPU usage
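Update frequency and buffering can be combined: collect peak values as they arrive and push a single aggregated value to the UI at a fixed interval. The `PeakThrottle` class below is a hypothetical helper, not part of the SDK. It is not thread-safe, so call it from the thread that raises the audio event and marshal the returned values to the UI thread yourself:

```cs
using System;
using System.Diagnostics;

// Hypothetical helper: aggregate (min, max) peaks and release them at most once
// per interval. Not thread-safe: call it from a single thread.
public sealed class PeakThrottle
{
    private readonly TimeSpan interval;
    private readonly Func<TimeSpan> now;
    private TimeSpan? lastFlush;
    private double pendingMin = double.MaxValue;
    private double pendingMax = double.MinValue;

    // 'clock' can be injected for testing; by default a Stopwatch supplies elapsed time.
    public PeakThrottle(TimeSpan interval, Func<TimeSpan> clock = null)
    {
        this.interval = interval;
        if (clock == null)
        {
            var stopwatch = Stopwatch.StartNew();
            clock = () => stopwatch.Elapsed;
        }

        now = clock;
    }

    // Records one (min, max) pair. Returns true, with the aggregated range since
    // the last flush, when at least 'interval' has elapsed since that flush.
    public bool Add(double min, double max, out double flushedMin, out double flushedMax)
    {
        pendingMin = Math.Min(pendingMin, min);
        pendingMax = Math.Max(pendingMax, max);

        TimeSpan t = now();
        if (lastFlush.HasValue && t - lastFlush.Value < interval)
        {
            flushedMin = 0;
            flushedMax = 0;
            return false;
        }

        flushedMin = pendingMin;
        flushedMax = pendingMax;
        pendingMin = double.MaxValue;
        pendingMax = double.MinValue;
        lastFlush = t;
        return true;
    }
}
```

With a 50 ms interval, the UI is updated at most about 20 times per second, no matter how often the audio event fires, and no peak between updates is lost.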

## Conclusion

Implementing VU meters and waveform painters adds valuable visual feedback to media applications. Whether you're developing in WinForms or WPF, these audio visualization components help users monitor and understand audio levels and patterns more intuitively.

By following the implementation steps outlined in this guide, you can enhance your .NET media applications with professional-quality audio visualization features that improve the overall user experience.

---

For more code examples and related SDKs, visit our [GitHub repository](https://github.com/visioforge/.Net-SDK-s-samples).

---END OF PAGE---

# Local File: .\dotnet\general\code-samples\zoom-onvideoframebuffer.md

---

title: Implementing Custom Zoom Effects in .NET Video Apps

description: Learn how to create custom zoom effects using the OnVideoFrameBuffer event in .NET video applications. This step-by-step guide provides detailed C# code examples, implementation techniques, and best practices for video frame manipulation in your applications.

sidebar_label: Custom Zoom Implementation with OnVideoFrameBuffer

---

# Implementing Custom Zoom Effects with OnVideoFrameBuffer in .NET

[!badge size="xl" target="blank" variant="info" text="Video Capture SDK .Net"](https://www.visioforge.com/video-capture-sdk-net) [!badge size="xl" target="blank" variant="info" text="Video Edit SDK .Net"](https://www.visioforge.com/video-edit-sdk-net) [!badge size="xl" target="blank" variant="info" text="Media Player SDK .Net"](https://www.visioforge.com/media-player-sdk-net)

## Introduction

Zoom is a common requirement in video applications. This guide explains how to implement it manually in your .NET application by handling the OnVideoFrameBuffer event. The same technique works in the Video Capture SDK, Media Player SDK, and Video Edit SDK.

## Understanding the OnVideoFrameBuffer Event

The OnVideoFrameBuffer event gives your code direct access to each video frame's data during playback or processing. By handling this event, you can:

- Access raw frame data in real-time

- Apply custom modifications to individual frames

- Implement visual effects like zooming, rotation, or color adjustments

- Control video quality and performance

## Implementation Steps

The process of implementing a zoom effect involves several key steps:

1. Allocating memory for temporary buffers

2. Handling the OnVideoFrameBuffer event

3. Applying the zoom transformation to each frame

4. Managing memory to prevent leaks

Let's break down each of these steps with detailed explanations.

## Memory Management for Frame Processing

When working with video frames, proper memory management is critical. You'll need to allocate sufficient memory to handle frame data and temporary processing buffers.

```cs
private IntPtr tempBuffer = IntPtr.Zero;
private IntPtr tmpZoomFrameBuffer = IntPtr.Zero;
private int tmpZoomFrameBufferSize = 0;
```

These fields serve the following purposes:

- `tempBuffer`: Receives the zoomed frame before it is copied back into the original frame buffer

- `tmpZoomFrameBuffer`: Intermediate working buffer used by `EffectZoom`

- `tmpZoomFrameBufferSize`: Receives the required size of the intermediate buffer from `EffectZoom` (passed by `ref`)
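These buffers are allocated once, sized for the first frame. If the resolution can change during playback (for example, when switching sources), a buffer that grows on demand avoids overruns. The `UnmanagedFrameBuffer` class is a hypothetical sketch built only on `Marshal` calls from the .NET base library:

```cs
using System;
using System.Runtime.InteropServices;

// Hypothetical helper: owns one unmanaged buffer and grows it when a frame
// needs more space, e.g. after a resolution change.
public sealed class UnmanagedFrameBuffer : IDisposable
{
    public IntPtr Pointer { get; private set; } = IntPtr.Zero;
    public int Size { get; private set; }

    // Returns a pointer to at least 'requiredSize' bytes, reallocating only when needed.
    public IntPtr Ensure(int requiredSize)
    {
        if (requiredSize <= 0) throw new ArgumentOutOfRangeException(nameof(requiredSize));

        if (Pointer == IntPtr.Zero)
        {
            Pointer = Marshal.AllocCoTaskMem(requiredSize);
            Size = requiredSize;
        }
        else if (requiredSize > Size)
        {
            // ReAllocCoTaskMem may move the block; always use the returned pointer.
            Pointer = Marshal.ReAllocCoTaskMem(Pointer, requiredSize);
            Size = requiredSize;
        }

        return Pointer;
    }

    public void Dispose()
    {
        if (Pointer != IntPtr.Zero)
        {
            Marshal.FreeCoTaskMem(Pointer);
            Pointer = IntPtr.Zero;
            Size = 0;
        }
    }
}
```

In a handler like the one below, a call such as `tempBuffer = output.Ensure(e.Frame.DataSize);` (where `output` is a hypothetical `UnmanagedFrameBuffer` field) would replace the one-time allocation, and `Dispose` would replace the manual `FreeCoTaskMem` call.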

## Detailed Code Implementation

Below is a complete implementation of the zoom effect using the OnVideoFrameBuffer event in a Media Player SDK .NET application:

```cs
// Requires: using System; using System.Runtime.InteropServices;
// (FastImageProcessing and VideoFrameBufferEventArgs come from the SDK.)

private IntPtr tempBuffer = IntPtr.Zero;
private IntPtr tmpZoomFrameBuffer = IntPtr.Zero;
private int tmpZoomFrameBufferSize = 0;

private void MediaPlayer1_OnVideoFrameBuffer(object sender, VideoFrameBufferEventArgs e)
{
    // Allocate the output buffer on the first frame.
    // It is sized for that frame; reallocate it if the frame size can change.
    if (tempBuffer == IntPtr.Zero)
    {
        tempBuffer = Marshal.AllocCoTaskMem(e.Frame.DataSize);
    }

    // Set the zoom factor (2.0 = 200% zoom)
    const double zoom = 2.0;

    // Apply the zoom effect using the FastImageProcessing utility
    FastImageProcessing.EffectZoom(
        e.Frame.Data,                 // Source frame data
        e.Frame.Width,                // Frame width
        e.Frame.Height,               // Frame height
        tempBuffer,                   // Output buffer
        zoom,                         // Horizontal zoom factor
        zoom,                         // Vertical zoom factor
        0,                            // X offset from center (0 = center)
        0,                            // Y offset from center (0 = center)
        tmpZoomFrameBuffer,           // Intermediate buffer
        ref tmpZoomFrameBufferSize);  // Receives the required intermediate buffer size

    // On the first call the intermediate buffer does not exist yet: allocate it
    // with the reported size and skip this frame (it is displayed unmodified).
    if (tmpZoomFrameBufferSize > 0 && tmpZoomFrameBuffer == IntPtr.Zero)
    {
        tmpZoomFrameBuffer = Marshal.AllocCoTaskMem(tmpZoomFrameBufferSize);
        return;
    }

    // Copy the zoomed image back into the frame buffer
    FastImageProcessing.CopyMemory(tempBuffer, e.Frame.Data, e.Frame.DataSize);
}
```
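This guide does not document how `EffectZoom` works internally. To illustrate what a zoom of this kind computes, here is a managed nearest-neighbor sketch, not the SDK's implementation. It assumes a packed 24-bit frame (3 bytes per pixel, no row padding) held in a `byte[]`, and treats the offsets as pixels from the frame center; `ManagedZoom` is a hypothetical name:

```cs
using System;

// Hypothetical illustration (not the SDK's EffectZoom): nearest-neighbor zoom on a
// packed 24-bit frame, 3 bytes per pixel, no row padding (stride = width * 3).
public static class ManagedZoom
{
    private const int BytesPerPixel = 3;

    // offsetX/offsetY: distance in pixels from the frame center to the zoom center.
    // src and dst must be different arrays, which is why a separate output buffer is used.
    public static void Zoom(byte[] src, byte[] dst, int width, int height,
                            double zoomX, double zoomY, int offsetX, int offsetY)
    {
        if (zoomX <= 0) throw new ArgumentOutOfRangeException(nameof(zoomX));
        if (zoomY <= 0) throw new ArgumentOutOfRangeException(nameof(zoomY));
        int frameBytes = width * height * BytesPerPixel;
        if (src.Length < frameBytes || dst.Length < frameBytes)
            throw new ArgumentException("Buffers must hold width * height * 3 bytes.");

        // Zoom center in source coordinates.
        double centerX = width / 2.0 + offsetX;
        double centerY = height / 2.0 + offsetY;

        for (int y = 0; y < height; y++)
        {
            // Map the output row back to a source row (pixel centers), clamped to the frame.
            int sy = (int)Math.Floor(centerY + (y + 0.5 - height / 2.0) / zoomY);
            sy = Math.Max(0, Math.Min(height - 1, sy));

            for (int x = 0; x < width; x++)
            {
                int sx = (int)Math.Floor(centerX + (x + 0.5 - width / 2.0) / zoomX);
                sx = Math.Max(0, Math.Min(width - 1, sx));

                int s = (sy * width + sx) * BytesPerPixel;
                int d = (y * width + x) * BytesPerPixel;
                dst[d] = src[s];
                dst[d + 1] = src[s + 1];
                dst[d + 2] = src[s + 2];
            }
        }
    }
}
```

Each output pixel is mapped back to the source by dividing its distance from the output center by the zoom factor. Nearest-neighbor sampling is the simplest choice; bilinear interpolation gives smoother results at a higher cost per pixel.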

## Customizing the Zoom Effect

The code above uses a fixed zoom factor of 2.0 (200%), but you can modify this to create various zoom effects:

### Dynamic Zoom Levels

You can implement user-controlled zoom by replacing the constant zoom value with a variable:

```cs
// Replace this:
const double zoom = 2.0;

// With something like this:
double zoom = this.userZoomSlider.Value; // Get zoom value from UI control
```
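Frame callbacks are usually raised on a worker thread rather than the UI thread (confirm this for your SDK version), and reading a control such as a slider from another thread throws in WPF and is not guaranteed safe in WinForms. One option, sketched below with a hypothetical `ZoomSettings` class and illustrative 1x to 8x limits, is to copy the slider value into a field from the UI thread and read only that field in the frame handler:

```cs
using System;
using System.Threading;

// Hypothetical helper: the UI thread writes the zoom factor, the frame handler
// reads it, and neither touches UI controls from the wrong thread.
public sealed class ZoomSettings
{
    // Illustrative limits, not SDK requirements.
    public const double MinZoom = 1.0;
    public const double MaxZoom = 8.0;

    private double zoom = MinZoom;

    // Call on the UI thread, e.g. from the slider's value-changed event.
    public void SetZoom(double value)
    {
        if (double.IsNaN(value)) return;
        double clamped = Math.Max(MinZoom, Math.Min(MaxZoom, value));

        // Interlocked keeps the 64-bit write atomic, even in 32-bit processes.
        Interlocked.Exchange(ref zoom, clamped);
    }

    // Call from the OnVideoFrameBuffer handler instead of reading the slider.
    public double Zoom => Interlocked.CompareExchange(ref zoom, 0.0, 0.0);
}
```

With a hypothetical `zoomSettings` field, the slider's value-changed handler would call `zoomSettings.SetZoom(userZoomSlider.Value)`, and the frame handler would use `double zoom = zoomSettings.Zoom;`.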

### Zoom with Focus Point

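The `EffectZoom` method also takes X and Y offsets that move the zoom center away from the frame center, which lets you focus on a specific area. In the example below, an X offset of `Width / 4` and a Y offset of `-Height / 4` center the zoom on the top-right quadrant, so a positive X offset moves the focus right and a negative Y offset moves it up: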

```cs
// Zoom centered on the top-right quadrant
FastImageProcessing.EffectZoom(
    e.Frame.Data,
    e.Frame.Width,
    e.Frame.Height,
    tempBuffer,
    zoom,
    zoom,
    e.Frame.Width / 4,   // X offset from center
    -e.Frame.Height / 4, // Y offset from center
    tmpZoomFrameBuffer,
    ref tmpZoomFrameBufferSize);
```
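To let users pick the focus point, a clicked position (in frame pixels) can be converted into these offsets. The sketch below follows the convention in the example above (positive X moves right, negative Y moves up) and clamps the result so the zoomed area stays inside the frame. `ZoomFocus` is a hypothetical helper, and whether `EffectZoom` itself needs clamped offsets is an assumption to verify:

```cs
using System;

// Hypothetical helper: convert a point in frame pixels into center offsets,
// clamped so the visible (zoomed) region stays inside the frame.
public static class ZoomFocus
{
    public static (int OffsetX, int OffsetY) FromPoint(int pointX, int pointY, int width, int height, double zoom)
    {
        // Below 1.0 there is no smaller window to keep inside the frame.
        if (zoom < 1.0) zoom = 1.0;

        // Half-size of the visible source region at this zoom level.
        double halfW = width / (2.0 * zoom);
        double halfH = height / (2.0 * zoom);

        // Largest offsets that still keep the visible region inside the frame.
        double maxX = width / 2.0 - halfW;
        double maxY = height / 2.0 - halfH;

        // Offsets are measured from the frame center; Y grows downward.
        double dx = pointX - width / 2.0;
        double dy = pointY - height / 2.0;

        dx = Math.Max(-maxX, Math.Min(maxX, dx));
        dy = Math.Max(-maxY, Math.Min(maxY, dy));

        return ((int)Math.Round(dx), (int)Math.Round(dy));
    }
}
```

For a 1920x1080 frame at 2x zoom, a click at (1440, 270) yields offsets of (480, -270), the same values as `Width / 4` and `-Height / 4` in the example above.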

## Performance Considerations

When implementing custom video effects like zooming, consider these performance tips:

1. **Memory Management**: Always free allocated memory when your application closes to prevent leaks

2. **Buffer Reuse**: Reuse buffers when possible rather than reallocating for each frame

3. **Processing Time**: Keep processing time minimal to maintain smooth video playback

4. **Resolution Impact**: Higher resolution videos require more processing power for real-time effects
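To check whether processing stays within budget, compare the time spent in the handler with the frame interval (1000 ms divided by the frame rate). This `FrameBudget` sketch is hypothetical, and its default of allowing half the interval is an arbitrary margin that leaves time for decoding and rendering:

```cs
using System;
using System.Diagnostics;

// Hypothetical helper: compare per-frame processing time with the frame interval.
public static class FrameBudget
{
    // Milliseconds available per frame, e.g. about 33.3 ms at 30 fps.
    public static double BudgetMs(double fps)
    {
        if (fps <= 0) throw new ArgumentOutOfRangeException(nameof(fps));
        return 1000.0 / fps;
    }

    // Runs 'work' and returns how long it took, in milliseconds.
    public static double Measure(Action work)
    {
        var stopwatch = Stopwatch.StartNew();
        work();
        stopwatch.Stop();
        return stopwatch.Elapsed.TotalMilliseconds;
    }

    // True when processing uses more than 'allowedFraction' of the frame interval.
    // The 0.5 default is an arbitrary margin for decoding and rendering.
    public static bool IsOverBudget(double elapsedMs, double fps, double allowedFraction = 0.5)
        => elapsedMs > BudgetMs(fps) * allowedFraction;
}
```

At 30 fps the budget is about 33.3 ms per frame, so with the default margin a handler that regularly takes longer than about 16.7 ms is worth optimizing.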

## Cleaning Up Resources

To properly clean up resources when your application closes, implement a cleanup method:

```cs
private void CleanupZoomResources()
{
    if (tempBuffer != IntPtr.Zero)
    {
        Marshal.FreeCoTaskMem(tempBuffer);
        tempBuffer = IntPtr.Zero;
    }

    if (tmpZoomFrameBuffer != IntPtr.Zero)
    {
        Marshal.FreeCoTaskMem(tmpZoomFrameBuffer);
        tmpZoomFrameBuffer = IntPtr.Zero;
    }
}
```

Call this method after playback has stopped (for example, from your form's closing handler) so that the frame handler is no longer using the buffers when they are freed.

## Troubleshooting Common Issues

When implementing the zoom effect, you might encounter these issues:

1. **Distorted Image**: Check that your zoom factors for width and height are equal for uniform scaling

2. **Blank Frames**: Ensure proper memory allocation and buffer sizes

3. **Poor Performance**: Consider reducing the frame processing complexity or the video resolution

4. **Memory Errors**: Verify that all memory is properly allocated and freed

## Conclusion

Implementing custom zoom effects using the OnVideoFrameBuffer event gives you precise control over video appearance in your .NET applications. By following the techniques outlined in this guide, you can create sophisticated zoom functionality that enhances the user experience in your video applications.

Remember to properly manage memory resources and optimize for performance to ensure smooth playback with your custom effects.

---

Visit our [GitHub](https://github.com/visioforge/.Net-SDK-s-samples) page to get more code samples.

---END OF PAGE---

# Local File: .\dotnet\general\code-samples\zoom-video-multiple-renderer.md

---

title: Setting Zoom Parameters for Multiple Video Renderers